The concept of the Metaverse, a persistent, shared, 3D virtual space, has transitioned from science fiction to a tangible technological goal.

It promises a seamless convergence of the physical and digital worlds, offering experiences that range from collaborative work and education to high-fidelity entertainment and social interaction.

However, this ambitious vision is fundamentally dependent on a single, critical component: the microprocessor.

Microprocessors, in their various forms—Central Processing Units (CPUs), Graphics Processing Units (GPUs), and specialized accelerators—are the computational engines that power every aspect of the immersive experience.

They are responsible for rendering complex virtual environments, processing real-time sensor data, managing low-latency network communication, and executing the sophisticated artificial intelligence (AI) that brings virtual worlds to life.

Without a revolution in silicon design and performance, the Metaverse would remain a choppy, unconvincing, and ultimately unusable concept [1].

This article provides an in-depth technical analysis of the microprocessor’s indispensable role, examining the architectural demands, the critical performance metrics, and the specialized processing required to transform the dream of the Metaverse into a smooth, responsive, and truly immersive reality.

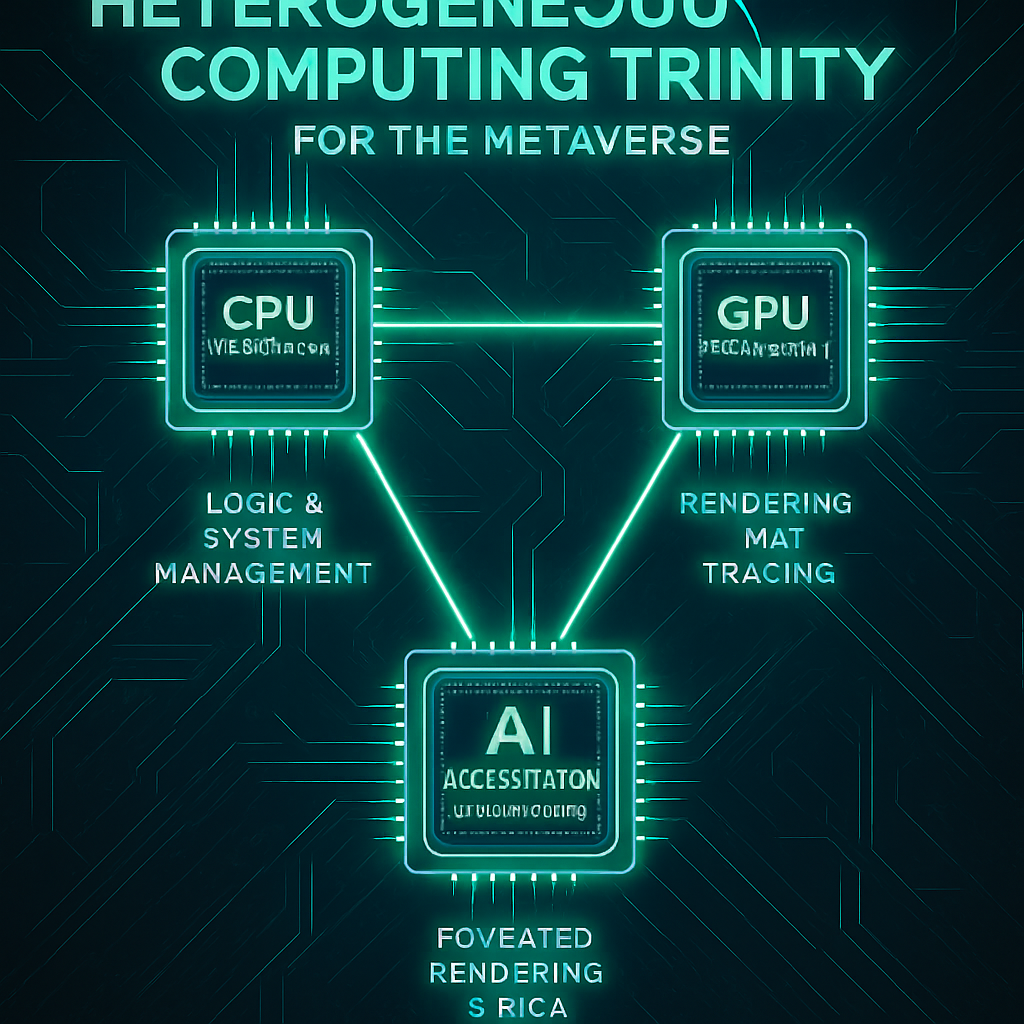

The Heterogeneous Computing Trinity: CPU, GPU, and AI Accelerator

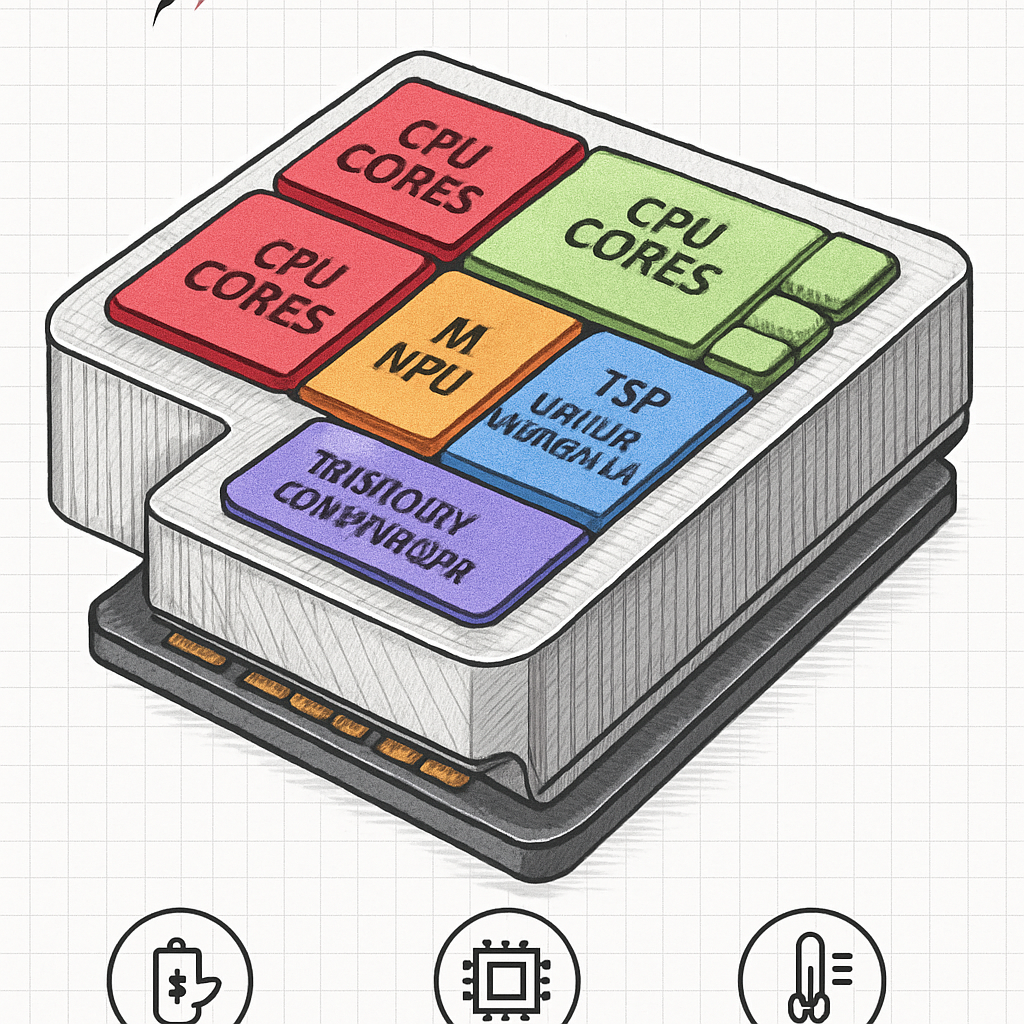

The computational demands of the Metaverse are too vast and varied for any single type of processor to handle efficiently.

Instead, modern immersive systems rely on a heterogeneous computing architecture, a powerful trinity of specialized processors working in concert to manage the diverse workload [2].

The Central Processing Unit (CPU): The Orchestrator

The CPU serves as the brain and orchestrator of the entire system.

While it often takes a backseat to the GPU in terms of raw graphical output, its role is crucial for managing the non-graphical aspects of the virtual world.

The CPU handles the operating system, application logic, network stack, and the overall management of the virtual environment.

In a complex, multi-user Metaverse, the CPU is responsible for executing the game logic or simulation logic, which includes collision detection, physics calculations for non-graphical objects, and managing the state of thousands of concurrent users and objects [3].
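As a rough illustration of the kind of collision test the CPU runs many times per frame, the sketch below checks whether two axis-aligned bounding boxes (AABBs) overlap. The `AABB` type and `aabb_overlap` function are hypothetical names chosen for this example, and real engines usually add a broad-phase structure so that not every pair of objects has to be tested.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class AABB:
    """Axis-aligned bounding box described by its minimum and maximum corners."""
    min_corner: Vec3
    max_corner: Vec3


def aabb_overlap(a: AABB, b: AABB) -> bool:
    """Return True if the boxes overlap (touching faces count as overlap).

    Two boxes overlap only if their extents overlap on all three axes.
    """
    return all(
        a.min_corner[i] <= b.max_corner[i] and b.min_corner[i] <= a.max_corner[i]
        for i in range(3)
    )


# Hypothetical objects: an avatar's bounding box and two pieces of scenery.
avatar = AABB((0.0, 0.0, 0.0), (1.0, 2.0, 1.0))
nearby_wall = AABB((0.5, 0.0, 0.5), (3.0, 3.0, 0.6))
distant_tree = AABB((10.0, 0.0, 10.0), (11.0, 5.0, 11.0))
```

Each test is only a handful of comparisons, but the number of candidate pairs grows quickly with the number of objects, which is one reason simulation logic stays CPU-intensive.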

For high-performance VR systems, CPU selection often favors high clock speeds over a very large core count.

This is because many core simulation and rendering pipeline tasks are sequential and benefit most from fast single-thread performance, ensuring that the system can quickly respond to user input and maintain the critical frame rate necessary for immersion.

The Graphics Processing Unit (GPU): The Visual Engine

The GPU is arguably the most recognizable workhorse of the immersive experience.

Its architecture, built on thousands of parallel cores, is perfectly suited for the massive, repetitive calculations required for rendering high-fidelity 3D graphics.

The GPU’s primary function is to transform the abstract data of a virtual world—polygons, textures, and lighting information—into the millions of colored pixels displayed to the user.

This process involves two major techniques: Rasterization and Ray Tracing [4].

Rasterization is the traditional method of converting 3D geometry into 2D pixels.
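A minimal sketch of the idea, assuming a single 2D triangle that has already been projected to screen space: each pixel centre is tested against the triangle's three edges, and pixels that pass are covered. The function names are illustrative only. A GPU performs this kind of test in parallel across many pixels, with many refinements this toy version omits.

```python
def edge(ax, ay, bx, by, px, py):
    """Edge function: its sign tells which side of the edge A->B the point P is on."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def rasterize_triangle(v0, v1, v2, width, height):
    """Return the set of (x, y) pixels whose centres fall inside the triangle."""
    covered = set()
    for y in range(height):
        for x in range(width):
            px, py = x + 0.5, y + 0.5  # sample at the pixel centre
            w0 = edge(v1[0], v1[1], v2[0], v2[1], px, py)
            w1 = edge(v2[0], v2[1], v0[0], v0[1], px, py)
            w2 = edge(v0[0], v0[1], v1[0], v1[1], px, py)
            # Inside when all three edge functions agree in sign,
            # which accepts either vertex winding order.
            all_non_negative = w0 >= 0 and w1 >= 0 and w2 >= 0
            all_non_positive = w0 <= 0 and w1 <= 0 and w2 <= 0
            if all_non_negative or all_non_positive:
                covered.add((x, y))
    return covered


# A right triangle on a tiny 4x4 pixel grid.
pixels = rasterize_triangle((0, 0), (4, 0), (0, 4), width=4, height=4)
```

Every pixel is independent of the others, which is why the workload maps so naturally onto thousands of parallel GPU cores.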

However, modern immersive experiences increasingly rely on hardware-accelerated Ray Tracing, which simulates the physical behavior of light to produce hyper-realistic reflections, refractions, and shadows.

Dedicated Ray Tracing cores (like NVIDIA’s RT Cores or AMD’s Ray Accelerators) are essential for achieving this visual fidelity in real-time, a non-negotiable requirement for a convincing Metaverse.
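To give a sense of the arithmetic involved, the sketch below shows one of the simplest ray tracing primitives: intersecting a ray with a sphere. It is a textbook example and not a description of how any vendor's hardware works; the function name and scene values are assumptions made for illustration.

```python
import math


def ray_sphere_hit(origin, direction, center, radius):
    """Return the distance along the ray to the nearest hit, or None on a miss.

    Assumes `direction` is a unit vector, so the quadratic's leading
    coefficient is 1. For simplicity, a ray starting inside the sphere
    is reported as a miss.
    """
    oc = [o - c for o, c in zip(origin, center)]
    b = 2.0 * sum(d * e for d, e in zip(direction, oc))
    c = sum(e * e for e in oc) - radius * radius
    discriminant = b * b - 4.0 * c
    if discriminant < 0:
        return None  # the ray's line never touches the sphere
    t = (-b - math.sqrt(discriminant)) / 2.0
    return t if t >= 0 else None


# A ray looking down the -z axis at a unit sphere five units away.
hit = ray_sphere_hit((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), (0.0, 0.0, -5.0), 1.0)
miss = ray_sphere_hit((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, -5.0), 1.0)
```

A real scene requires an enormous number of such intersection tests per frame, against triangles rather than spheres, which is the workload that dedicated ray tracing hardware is built to accelerate.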

AI Accelerators (NPUs/TPUs): The Cognitive Core

The Metaverse is not just a visual space; it is an intelligent one.

AI chips, often referred to as Neural Processing Units (NPUs) or Tensor Processing Units (TPUs), are the cognitive engines that power the smart elements of the virtual world.

These specialized processors are optimized for the matrix operations that form the core of machine learning algorithms.

Their role extends beyond simple graphics to include:

- AI-Driven Content Generation: Real-time generation of textures, non-player character (NPC) dialogue, and environmental details to reduce the load on the network and storage [5].

- Intelligent Agents: Powering sophisticated NPCs and virtual assistants that can understand natural language, adapt to user behavior, and provide personalized interactions.

- Foveated Rendering: A critical optimization technique in which the AI accelerator estimates the user’s gaze from eye-tracking data, and the GPU then renders only the small region being looked at in full resolution while rendering the periphery at lower quality. This dramatically reduces the overall computational load without sacrificing perceived visual quality.
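The savings from foveated rendering can be estimated with simple arithmetic. The sketch below is a back-of-the-envelope model, not a measurement: the function name and the example values (10% of the image at full resolution, the rest at half resolution per axis) are assumptions chosen only to show the calculation.

```python
def foveated_pixel_fraction(fovea_area_fraction: float, periphery_scale: float) -> float:
    """Fraction of full-resolution shading work that remains after foveation.

    fovea_area_fraction: share of the image rendered at full resolution (0..1).
    periphery_scale: linear resolution scale for the remaining area
        (0.5 means half the width and half the height, so a quarter of the pixels).
    """
    if not 0.0 <= fovea_area_fraction <= 1.0:
        raise ValueError("fovea_area_fraction must be between 0 and 1")
    if not 0.0 < periphery_scale <= 1.0:
        raise ValueError("periphery_scale must be in (0, 1]")
    periphery_area = 1.0 - fovea_area_fraction
    return fovea_area_fraction + periphery_area * periphery_scale ** 2


# Hypothetical configuration: 10% foveal region, periphery at half resolution.
remaining = foveated_pixel_fraction(0.10, 0.5)
```

Under these assumed values, roughly a third of the original pixel work remains; actual savings depend on the headset, the eye tracker, and the renderer.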

The rise of custom AI silicon, such as Meta’s MTIA chips, underscores the industry’s recognition that specialized hardware is necessary to handle the massive AI inference and training workloads required to build and maintain a dynamic, intelligent Metaverse [6].

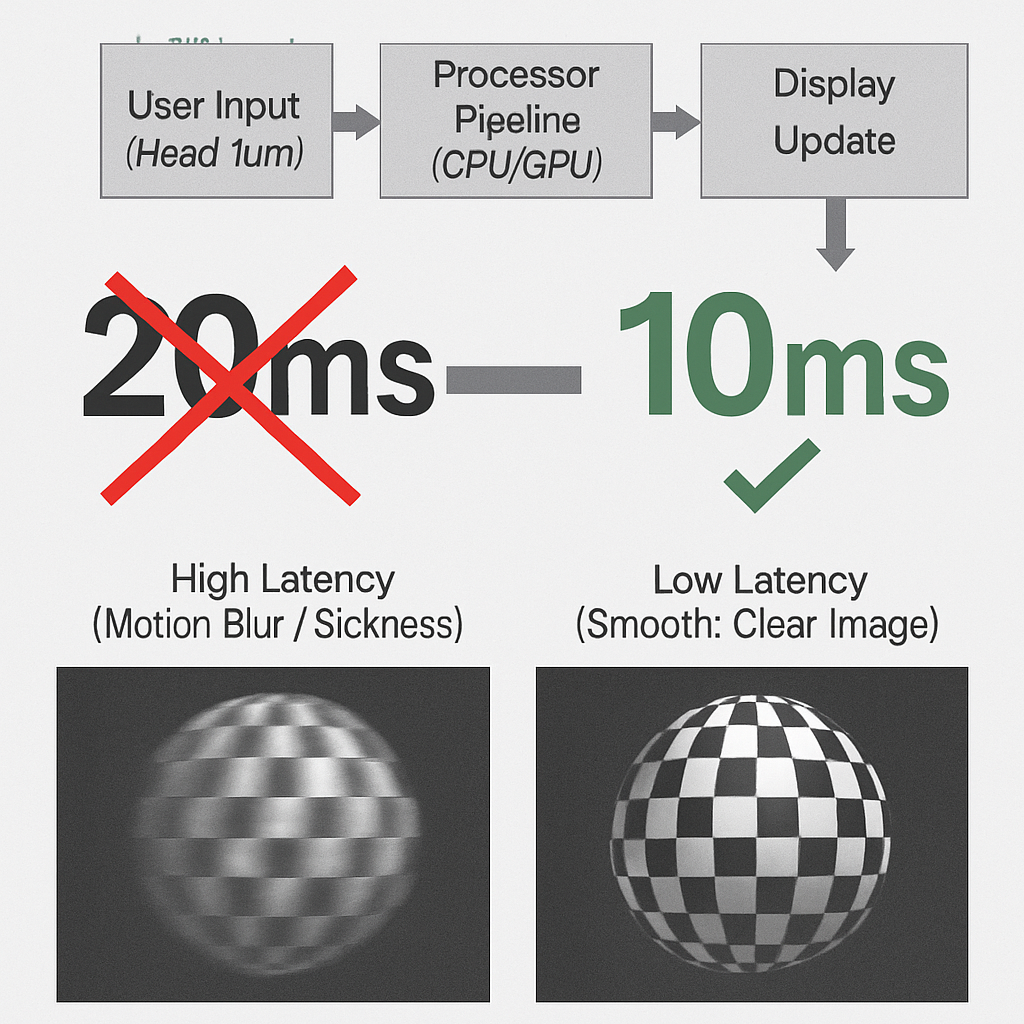

The Latency Imperative: The Non-Negotiable Requirement

Perhaps the single most critical challenge for microprocessors in the Metaverse is the Latency Imperative.

Latency, the delay between a user’s action (e.g., turning their head) and the system’s response (e.g., updating the display), must be minimized to an almost imperceptible level.

If the latency is too high, the visual information presented to the user will not match the input from their inner ear (vestibular system), leading to a phenomenon known as simulator sickness or cybersickness.

This is a major barrier to mass adoption of immersive technology [7].

The industry consensus is that the total motion-to-photon latency (the time from sensor input to the light hitting the user’s eye) must be under 20 milliseconds (ms), with many high-end systems targeting 10 ms or less for a truly comfortable and seamless experience [8].
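To see how tight that budget is, it helps to add up the stages between a head movement and the updated image. The sketch below does this arithmetic; the stage names and durations are hypothetical placeholders, not measurements from any real headset.

```python
def frame_time_ms(refresh_hz: float) -> float:
    """Time available to produce one frame at a given display refresh rate."""
    return 1000.0 / refresh_hz


def total_latency_ms(stages: dict) -> float:
    """Sum the per-stage delays (in milliseconds) from sensor input to display."""
    return sum(stages.values())


# Hypothetical per-stage delays in milliseconds (illustrative values only).
example_stages = {
    "sensor_sampling": 1.0,
    "tracking_and_fusion": 2.0,
    "cpu_simulation": 3.0,
    "gpu_render": 8.0,
    "display_scanout": 5.0,
}

BUDGET_MS = 20.0  # the comfort threshold cited in the text
total = total_latency_ms(example_stages)
within_budget = total <= BUDGET_MS
```

At a 90 Hz refresh rate a single frame lasts about 11.1 ms, so in this assumed breakdown the whole pipeline has less than two frame times to complete and little slack remains for any stage to overrun.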

Microprocessors address this challenge through several hardware and architectural optimizations:

- High Clock Speeds: As mentioned, fast single-thread performance in the CPU is vital for quickly processing sequential tasks in the rendering pipeline.

- Pipelining and Parallelism: GPUs use highly optimized pipelines to ensure that data flows continuously and efficiently from geometry processing to final pixel output.

- Asynchronous Timewarp (ATW) and Spacewarp (ASW): These are reprojection techniques, run in software on the headset’s processors, that adjust the most recently rendered image using the latest tracking data when a new frame has not finished rendering in time. Timewarp corrects for head rotation, while Spacewarp goes further and synthesizes extrapolated frames from the motion seen in previous frames. This “warping” process is computationally inexpensive but highly effective at reducing perceived latency [9].
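The core idea behind timewarp-style reprojection can be sketched in one dimension. The example below is a deliberate simplification that considers yaw only and uses a linear small-angle approximation; real implementations reproject with a full 3D rotation. The function name, sign convention, and example values are assumptions for illustration.

```python
def timewarp_shift_px(
    render_yaw_deg: float,
    latest_yaw_deg: float,
    horizontal_fov_deg: float,
    image_width_px: int,
) -> float:
    """Horizontal shift to apply to an already-rendered frame.

    Convention assumed here: positive yaw means the head has turned right,
    and a positive return value shifts the image to the right. If the head
    turned right after rendering started, the scene must slide left.
    """
    yaw_delta = latest_yaw_deg - render_yaw_deg
    pixels_per_degree = image_width_px / horizontal_fov_deg
    return -yaw_delta * pixels_per_degree


# The frame was rendered for a yaw of 10 degrees, but by display time the
# tracker reports 11 degrees (hypothetical values).
shift = timewarp_shift_px(10.0, 11.0, horizontal_fov_deg=100.0, image_width_px=2000)
```

Shifting an existing image costs far less than rendering a new one, which is why this correction can run right before the display refreshes.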

The relentless pursuit of low latency dictates every design choice, from the choice of memory (high-bandwidth, low-latency VRAM) to the interconnects between the CPU and GPU.

Power, Portability, and the Rise of Custom SoCs

For the Metaverse to be truly ubiquitous, the hardware must be portable and untethered.

This shift from bulky, PC-tethered headsets to sleek, standalone devices like the Meta Quest or Apple Vision Pro introduces a severe constraint: power efficiency and thermal management.

A powerful processor that draws hundreds of watts and requires active, noisy cooling is simply not viable when strapped to a user’s face.

https://youtu.be/wAlcX7QaWkc?si=MEWyf0W4xxGSw9n8

The solution lies in the development of highly integrated, custom System-on-Chips (SoCs).

A custom SoC is essentially a miniature computer on a single piece of silicon, integrating the CPU, GPU, AI accelerator, memory controller, image signal processor (ISP), and connectivity modules.

This integration offers three major advantages [10]:

- Miniaturization: By combining all necessary components, the overall size and weight of the device are drastically reduced, improving comfort and wearability.

- Power Efficiency: Data transfer between components on the same chip is far more energy-efficient than transferring data across a motherboard. Furthermore, the entire chip can be custom-designed for maximum performance per watt, often leveraging the power-efficient Arm architecture.

- Thermal Management: A highly efficient SoC generates less heat, allowing for passive or minimal cooling solutions. This is crucial for a device worn directly against the skin, where excessive heat is not only uncomfortable but can also lead to performance throttling.

The design philosophy for Metaverse SoCs is a radical departure from traditional desktop processors, prioritizing efficiency and integration over raw, unconstrained power draw.

This is the foundation of the untethered, all-day immersive experience.

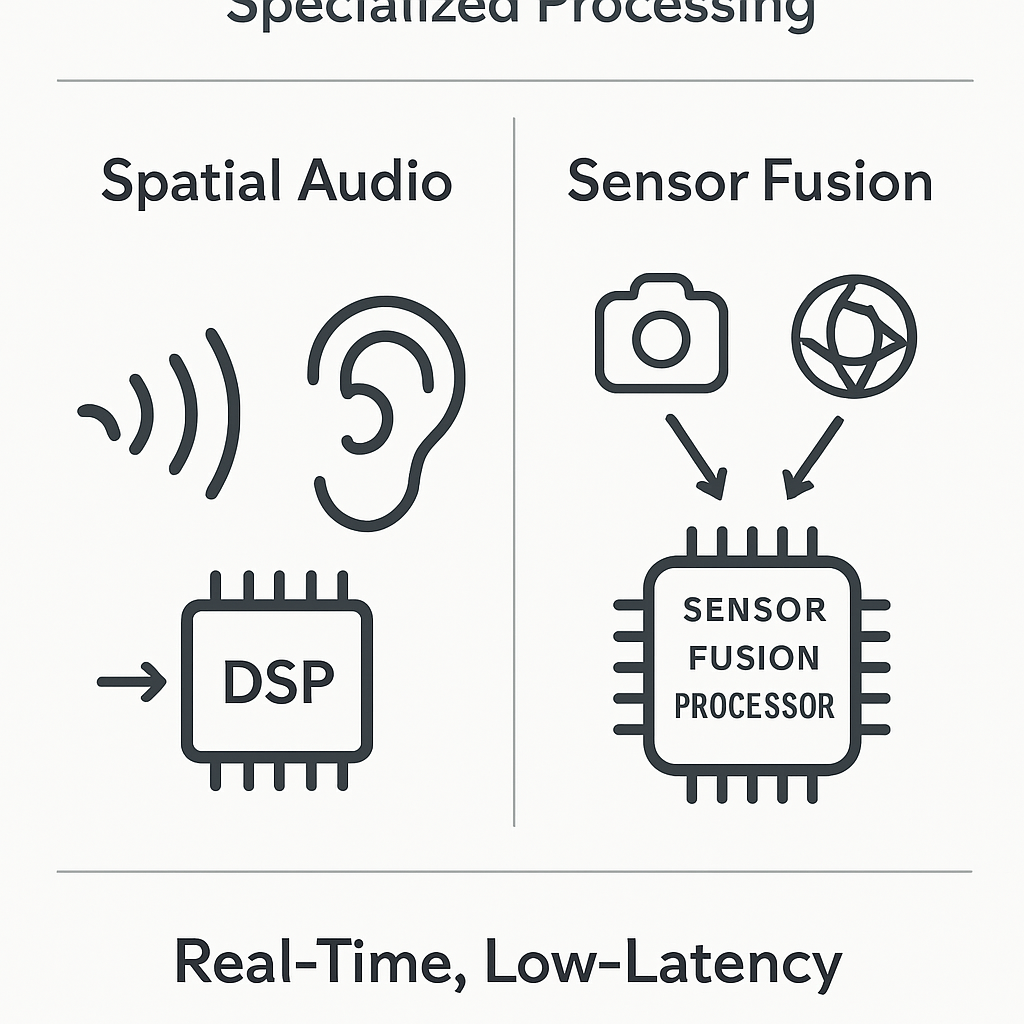

Beyond Graphics: Specialized Processing for Full Immersion

Immersion in the Metaverse is a multi-sensory experience that extends far beyond visual rendering.

Microprocessors are also responsible for the complex, real-time processing of sound, touch, and spatial awareness.

Spatial Audio Processing

A realistic virtual world requires sound that is not just heard, but placed.

Spatial audio tricks the human brain into perceiving sound sources as coming from specific locations in 3D space, which is vital for realism and navigation [11].

This is achieved through complex calculations involving Head-Related Transfer Functions (HRTFs), which model how sound waves are filtered by the shape of a person’s head, ears, and torso.

These calculations must be performed in real-time, based on the user’s head position and the virtual location of the sound source.

Dedicated Digital Signal Processors (DSPs) or specialized audio processing blocks within the SoC are tasked with this workload.

DSPs are highly efficient at the repetitive mathematical operations required for filtering and manipulating audio signals, ensuring that spatial audio is rendered with the same low latency as the visuals.
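In the time domain, applying an HRTF amounts to convolving the mono source with a pair of head-related impulse responses (HRIRs), one per ear. The sketch below shows that operation in plain Python. The two-sample HRIRs are toy values invented for illustration; measured responses are much longer and change with the direction of the source.

```python
def convolve(signal, kernel):
    """Direct-form discrete convolution of two sample sequences."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    for i, s in enumerate(signal):
        for j, k in enumerate(kernel):
            out[i + j] += s * k
    return out


def spatialize(mono, hrir_left, hrir_right):
    """Filter one mono source into a (left, right) pair of ear signals."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Toy HRIRs for a source on the listener's left: the right ear hears the
# sound one sample later and at half the amplitude (made-up values).
hrir_left = [1.0, 0.0]
hrir_right = [0.0, 0.5]

left, right = spatialize([1.0, 2.0], hrir_left, hrir_right)
```

The inner loop is a long run of multiply-accumulate operations, which is the pattern DSP hardware is designed to execute efficiently.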

Sensor Fusion and Tracking

To accurately place the user within the virtual world, the headset must continuously track the user’s head, hands, and eyes.

This requires processing massive amounts of data from an array of sensors, including:

- Inertial Measurement Units (IMUs): Gyroscopes and accelerometers that track rotation and movement.

- Cameras: Used for Inside-Out Tracking, where the device maps its own position relative to the physical environment.

- Eye-Tracking Sensors: Used for foveated rendering and social presence (avatar eye movement).

The process of combining and synchronizing this disparate data stream is called Sensor Fusion.

This is a highly computationally intensive task that must be executed with near-zero latency to prevent tracking errors and drift [12].

Specialized Image Signal Processors (ISPs) handle the raw camera data, while dedicated sensor fusion processors within the SoC combine the IMU and visual data to produce a single, highly accurate, and low-latency position and orientation output.
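One simple way to illustrate sensor fusion is a complementary filter, which blends a fast but drifting gyroscope estimate with a slower absolute reference such as a camera-derived orientation. This is a swapped-in teaching example, not the method of any particular headset, and production trackers often use more elaborate estimators. The function name, the single-axis simplification, and the `alpha` value are assumptions.

```python
def complementary_filter(
    previous_angle_deg: float,
    gyro_rate_dps: float,
    reference_angle_deg: float,
    dt_s: float,
    alpha: float = 0.98,
) -> float:
    """Fuse one axis of orientation from two sources.

    previous_angle_deg: last fused estimate.
    gyro_rate_dps: angular rate from the gyroscope, in degrees per second.
    reference_angle_deg: absolute angle from a drift-free but noisier or
        slower source (for example, camera-based tracking).
    alpha: weight given to the integrated gyroscope estimate (0..1).
    """
    gyro_estimate = previous_angle_deg + gyro_rate_dps * dt_s  # integrate rotation
    return alpha * gyro_estimate + (1.0 - alpha) * reference_angle_deg


# One 10 ms update with hypothetical readings.
fused = complementary_filter(0.0, gyro_rate_dps=10.0, reference_angle_deg=0.0, dt_s=0.01)
```

The gyroscope term keeps the estimate responsive between camera updates, while the small reference term continually pulls accumulated drift back toward zero.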

Haptic Feedback and Control

The sense of touch, or haptics, is the final frontier of immersion.

Providing realistic tactile feedback requires precise, low-latency control over actuators in controllers or specialized suits.

This is typically managed by low-power, dedicated Microcontrollers (MCUs) [13].

While the main SoC handles the high-level physics simulation that determines what the user should feel, the MCU handles the low-level, real-time signal generation to the haptic motors.

The MCU’s simplicity and speed ensure that the tactile response is instantaneous, further cementing the user’s sense of presence.
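As an illustration of the low-level signal generation involved, the sketch below computes the samples of a short, decaying vibration pulse of the kind that might be sent to a haptic actuator. It is written in Python for readability, whereas MCU firmware is typically written in a lower-level language, and the function name and all parameter values are hypothetical.

```python
import math


def haptic_pulse(freq_hz: float, duration_s: float, sample_rate_hz: int, decay_per_s: float):
    """Return drive samples in the range [-1, 1] for a decaying sine pulse.

    The sine sets the vibration frequency and the exponential envelope makes
    the pulse fade out, which tends to feel like a short "tap".
    """
    n_samples = round(duration_s * sample_rate_hz)
    samples = []
    for i in range(n_samples):
        t = i / sample_rate_hz
        envelope = math.exp(-decay_per_s * t)
        samples.append(envelope * math.sin(2.0 * math.pi * freq_hz * t))
    return samples


# A 50 ms pulse at 170 Hz, sampled at 8 kHz (illustrative values only).
pulse = haptic_pulse(170.0, 0.05, 8000, decay_per_s=60.0)
```

Because the waveform is cheap to compute and can be streamed sample by sample, a small microcontroller can produce it without waiting on the main SoC.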

The Future of Silicon: Driving the Next Wave of Immersion

The demands of the Metaverse are not static; they are constantly escalating.

As virtual worlds become more complex, photorealistic, and populated, the microprocessors of today will quickly become inadequate.

This escalation is driving a fundamental shift in the semiconductor industry, away from a “one-size-fits-all” approach and toward highly specialized, domain-specific architectures [14].

Domain-Specific Architectures (DSAs)

The trend is clear: the future of Metaverse computing lies in Domain-Specific Architectures (DSAs).

Instead of relying on general-purpose CPUs and GPUs to handle all tasks, designers are creating specialized hardware blocks for every major function—from ray tracing and AI inference to video decoding and sensor fusion.

This approach maximizes efficiency by tailoring the silicon precisely to the mathematical operations it needs to perform.

For example, a dedicated Video Processing Unit (VPU) can decode and encode video streams for cloud-based Metaverse applications far more efficiently than a general-purpose CPU core.

Advanced Packaging and Chiplets

To overcome the physical limitations of scaling a single, monolithic chip, the industry is embracing advanced packaging techniques and the chiplet design model.

Chiplets are small, specialized dies (chips) that are interconnected on a single package, acting as a single, powerful processor [15].

This allows designers to mix and match the best components—a CPU chiplet built on one process node, a GPU chiplet on another, and a specialized AI chiplet—to create a highly optimized, powerful, and cost-effective system.

This modularity is essential for meeting the diverse and rapidly changing requirements of Metaverse hardware.

The Role of Cloud and Edge Computing

While the focus has been on the microprocessors in the headset (the edge device), the Metaverse also requires immense computational power in the cloud.

Cloud-based microprocessors, particularly high-end GPUs and massive AI clusters, are used for:

- Cloud Rendering: Streaming high-fidelity graphics to less powerful edge devices, offloading the most intensive rendering tasks.

- Massive Simulation: Running the physics and AI for large, persistent virtual worlds that are too complex for a single headset to manage.

- AI Model Training: Training the vast, complex AI models that govern the behavior of the virtual world and its inhabitants [16].

The microprocessors at the edge and in the cloud must work together seamlessly, with high-speed, low-latency connectivity (such as 5G and, in the future, 6G) acting as the nervous system connecting the two computational domains.

Conclusion: The Unseen Engine of Immersion

The Metaverse, in its ultimate form, is a testament to the power of modern microprocessors.

It is a technological ecosystem where the performance of the silicon directly translates into the quality of the human experience.

The journey from a clunky, high-latency virtual reality to a seamless, photorealistic, and multi-sensory Metaverse is a story of relentless innovation in chip design.

From the parallel processing might of the GPU to the power-sipping efficiency of the custom SoC and the cognitive power of the AI accelerator, microprocessors are the unseen engines that make immersion possible.

They are the foundation upon which the digital frontier is being built, constantly pushing the boundaries of speed, efficiency, and specialization to deliver a world where the line between the physical and the virtual is increasingly blurred.

The future of the Metaverse is, quite literally, etched in silicon.

References

- [1] Chip Challenges In The Metaverse – SemiEngineering

- [2] How the Metaverse is Redefining Chip Design – Sourcengine

- [3] Hardware Recommendations for Virtual Reality – Puget Systems

- [4] Chips for the Metaverse: Bridging Reality and Virtual Worlds – Medium

- [5] Meta Unveils Custom AI Chips, Igniting a New Era for Metaverse and AI Infrastructure – Financial Content

- [6] Meta Unveils Custom AI Chips, Igniting a New Era for Metaverse and AI Infrastructure – Financial Content

- [7] The metaverse and communication service providers – Nokia

- [8] D1: Network Requirements for Metaverse Services – Metaverse Standards Forum

- [9] Oculus Asynchronous Spacewarp Explai