The C programming language has been the bedrock of the embedded world, a testament to its efficiency and direct control over hardware.

From the microcontrollers governing our smart homes to the complex systems in aerospace and automotive industries, C’s influence is pervasive and undeniable.

Yet, as the Internet of Things (IoT) explodes and embedded devices become increasingly connected and mission-critical, the industry is grappling with a profound and systemic security crisis.

This crisis is not rooted in exotic attack techniques, but in a fundamental property of C’s design: the language does not enforce memory safety.

Memory-safety bugs account for the majority of serious vulnerabilities in large C and C++ codebases, turning the language’s greatest strength, manual memory management, into its most dangerous liability.

The solution, increasingly championed by government agencies and tech giants alike, is a radical shift to memory-safe languages, with Rust emerging as the most compelling and performant alternative.

This is not merely a technical debate; it is an imperative for a secure digital future.

The C Legacy: A Faustian Bargain of Performance and Peril

C’s design philosophy is rooted in providing the developer with maximum freedom and minimal abstraction.

It offers a thin abstraction layer over the hardware, allowing developers to write highly optimized code that extracts maximum performance from resource-constrained devices.

The ability to manipulate memory directly via pointers is the key to this power, enabling the creation of efficient data structures and low-level drivers.

However, this freedom comes at a catastrophic cost: the burden of memory safety is placed entirely on the human developer.

The sheer complexity of tracking the lifetime and validity of every memory allocation across a large, concurrent codebase is a task that decades of experience show humans cannot perform without error.

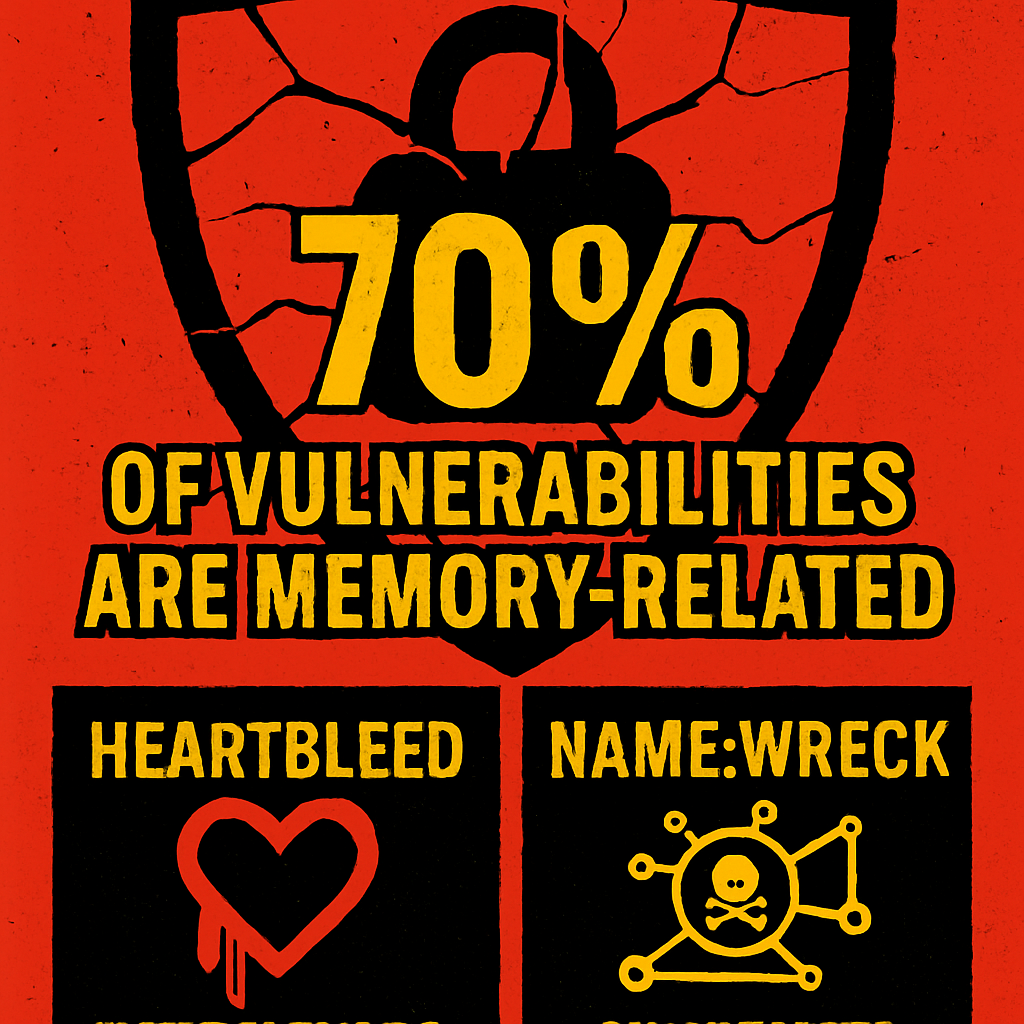

The data supporting this claim is stark.

Analyses from Microsoft and Google, cited in guidance from the U.S. National Security Agency (NSA), have found that roughly 70% of the serious security vulnerabilities in their large C and C++ codebases are caused by memory safety issues [1] [2].

This figure represents a systemic failure, not a series of isolated mistakes.

The Four Horsemen of C Vulnerabilities

The lack of memory protection in C gives rise to four primary classes of vulnerabilities that have plagued the industry for decades.

1. Buffer Overflows and Over-Reads: The Classic Hijack

A buffer overflow occurs when a program writes data past the end of a fixed-size buffer, corrupting adjacent data on the stack or heap.

This is the quintessential vulnerability used to achieve Remote Code Execution (RCE), allowing an attacker to inject and execute their own malicious code.

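The infamous Heartbleed bug in OpenSSL, disclosed in 2014, was a textbook buffer over-read: a missing length check let an attacker read up to 64 KB of server memory per request, exposing private keys, passwords, and session data on a large share of the web’s HTTPS servers and demonstrating the global impact a single C memory flaw can have [3].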
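To make the over-read concrete, here is a minimal Rust sketch of a Heartbleed-style echo handler. It assumes a simplified, hypothetical message type (Heartbeat, with claimed_len and payload fields) rather than the real TLS record layout; the point is that a checked slice lookup refuses a claimed length larger than the bytes actually received, where the vulnerable C code copied them blindly.

```rust
/// Simplified heartbeat request: the sender states a payload length and
/// supplies some bytes. Names and layout are hypothetical; this is not the
/// real TLS heartbeat record format.
struct Heartbeat<'a> {
    claimed_len: usize,
    payload: &'a [u8],
}

/// Builds the echo reply. The vulnerable C code roughly did
/// memcpy(reply, payload, claimed_len) without comparing claimed_len to the
/// bytes actually received. Here `get` returns None if the range would run
/// past the end of the slice, so an oversized claim is rejected instead of
/// leaking adjacent memory.
fn echo(msg: &Heartbeat) -> Option<Vec<u8>> {
    msg.payload.get(..msg.claimed_len).map(|bytes| bytes.to_vec())
}

fn main() {
    let received = [0xDEu8, 0xAD, 0xBE, 0xEF];

    // Honest request: the claimed length matches the data.
    let ok = Heartbeat { claimed_len: 4, payload: &received };
    println!("{:?}", echo(&ok));

    // Malicious request: claims far more bytes than were sent.
    let evil = Heartbeat { claimed_len: 65_535, payload: &received };
    println!("{:?}", echo(&evil));
}
```

The check costs a single comparison; the attacker’s oversized claim becomes a rejected request rather than a memory leak.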

2. Use-After-Free (UAF): The Ghost in the Machine

A UAF bug occurs when a program attempts to use a pointer to memory that has already been deallocated and potentially re-used for a different purpose.

This leads to unpredictable behavior, which an attacker can exploit to manipulate the program’s state or execute arbitrary code.

Memory-safety flaws remain common in embedded network code. In 2021, the NAME:WRECK disclosures described DNS-parsing vulnerabilities, including memory-safety bugs, in several TCP/IP stacks, among them the one in the C-based Nucleus RTOS; Forescout estimated that at least 100 million devices, including critical medical and industrial equipment, were affected [4].
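A common way C code ends up using freed memory is by keeping a pointer into a buffer that is later reallocated, for example by realloc() when the buffer grows. The minimal Rust sketch below, built around a hypothetical append_and_get_first helper, shows the same pattern with a Vec: the version that holds a reference across the growth step is rejected by the compiler (shown commented out with the error it produces), and the accepted version copies the value out first.

```rust
/// Appends a new reading and returns the reading that was first before the
/// append. The value is copied out (u16 is Copy) before `push`, so no
/// reference into the Vec's buffer is held while `push` may reallocate it.
fn append_and_get_first(readings: &mut Vec<u16>, value: u16) -> Option<u16> {
    let first = readings.first().copied();
    readings.push(value);
    first
}

// The C-style version keeps a pointer into the buffer across a possible
// reallocation. Rust refuses to compile it:
//
// fn append_and_get_first_ref(readings: &mut Vec<u16>, value: u16) -> Option<&u16> {
//     let first = readings.first();   // shared borrow into the buffer
//     readings.push(value);           // error[E0502]: cannot borrow `*readings` as mutable
//     first                           // because it is also borrowed as immutable
// }

fn main() {
    let mut readings = vec![100u16, 200];
    let first = append_and_get_first(&mut readings, 300);
    println!("first = {:?}, now {} readings", first, readings.len());
}
```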

3. Dangling Pointers: The Unreliable Reference

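A dangling pointer is one that still refers to memory that has been freed, or to a stack frame that has already returned. Dereferencing it is precisely what produces a use-after-free, with the same security consequences if the memory has since been reallocated.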

The complexity of managing pointers across asynchronous tasks, interrupts, and shared memory in an embedded RTOS makes the creation of dangling pointers almost inevitable in large C projects.

4. Double-Free Errors: The Corrupted Heap

Attempting to free the same block of memory twice can corrupt the heap’s internal data structures, leading to a denial-of-service condition or, more dangerously, allowing an attacker to control the memory allocator’s behavior to facilitate further exploitation.
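In Rust, ownership makes a double free unrepresentable in safe code: once a value is moved to a new owner, the old binding can no longer be used, so it cannot be released a second time. This minimal sketch assumes a hypothetical Frame type and a transmit function that takes ownership; a static counter records each release so the example can show that the buffer is freed exactly once.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};

/// Counts how many times a Frame has been released.
static RELEASES: AtomicUsize = AtomicUsize::new(0);

/// Hypothetical network frame that owns its buffer.
struct Frame {
    data: Vec<u8>,
}

impl Drop for Frame {
    fn drop(&mut self) {
        // Runs exactly once per Frame, when its single owner goes away.
        RELEASES.fetch_add(1, Ordering::SeqCst);
    }
}

/// Takes ownership of the frame; it is dropped (freed) when this function returns.
fn transmit(frame: Frame) -> usize {
    frame.data.len()
}

fn main() {
    let frame = Frame { data: vec![0xAA; 8] };
    let sent = transmit(frame); // ownership moves into `transmit`
    // drop(frame);            // error[E0382]: use of moved value: `frame`
    println!("sent {} bytes, releases = {}", sent, RELEASES.load(Ordering::SeqCst));
}
```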

The Limits of Mitigation

The C community has developed a robust set of mitigation techniques, including static analysis tools, runtime checks such as sanitizers, and compiler and operating-system defenses like stack canaries and address space layout randomization (ASLR).

While necessary, these are merely external patches for an internal problem.

They add overhead to the development process, runtime checks increase binary size, and, most critically, none of them eliminates the root cause: they make memory bugs easier to find or harder to exploit, but the bugs remain.

The U.S. Cybersecurity and Infrastructure Security Agency (CISA) has stated that these mitigations alone are insufficient and has urged the industry to move to memory-safe languages [5].

Rust’s Revolution: The Compiler as the Security Guard

Rust was engineered from the ground up to solve the memory safety crisis without compromising on the performance required for systems programming.

It achieves this through compile-time checks that enforce strict ownership and borrowing rules. In safe Rust, use-after-free, double-free, dangling references, and data races are rejected before the code ever runs, and out-of-bounds accesses become checked errors rather than silent memory corruption.

This is the core value proposition of Rust: security by construction.

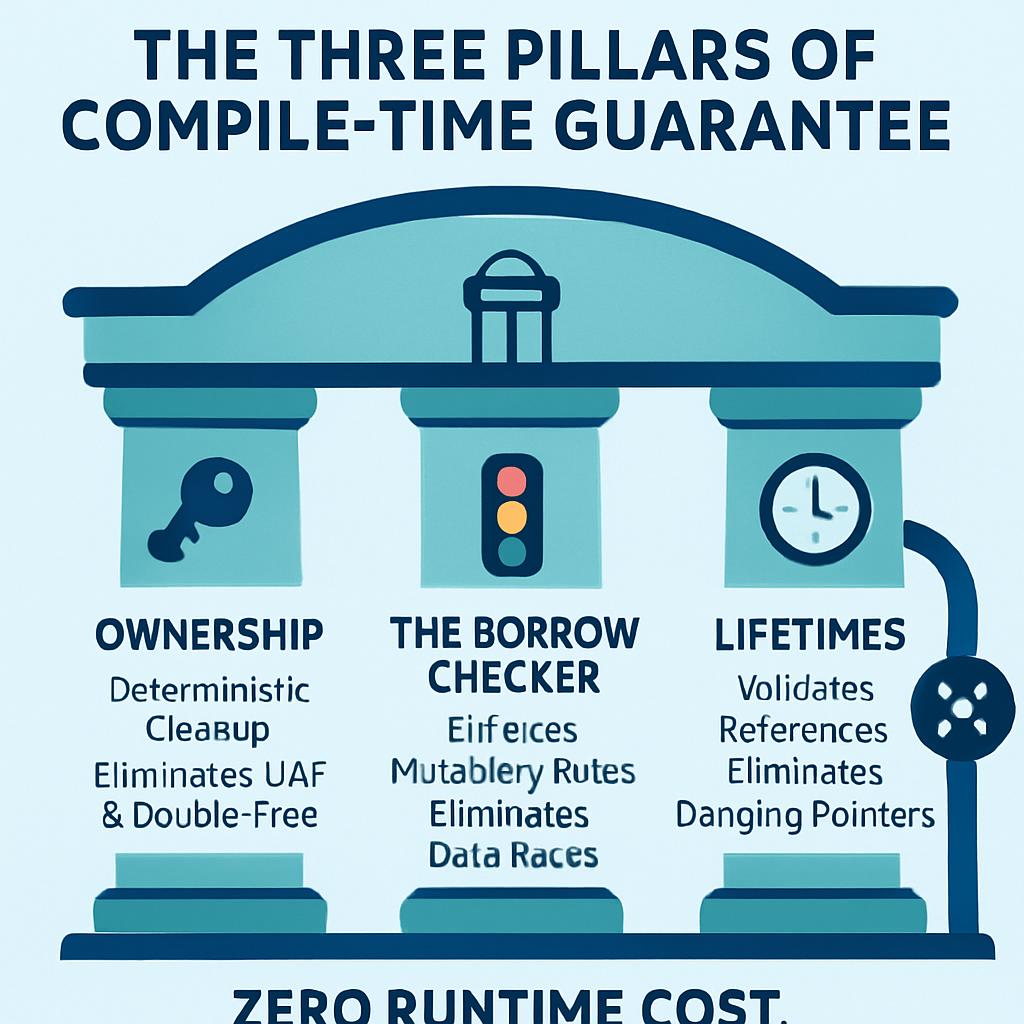

The Three Pillars of Guaranteed Safety

Rust’s memory safety is built upon three interconnected concepts that form its unique ownership system.

1. Ownership: Deterministic Resource Management

In Rust, every value has a single, clear owner, and when that owner goes out of scope, the value is automatically dropped, and its memory is freed.

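This deterministic destruction, a form of Resource Acquisition Is Initialization (RAII), eliminates the need for manual free calls. Because each value is released exactly once, by its single owner, double-free errors cannot occur in safe code, and together with the borrow checker this also rules out use-after-free.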

The compiler manages the memory lifecycle, ensuring that resources are cleaned up precisely and safely.
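The RAII pattern is easiest to see with a guard type. The sketch below assumes a hypothetical PowerGuard that logs when a peripheral is powered up and down; on real hardware the log entries would be register writes. No cleanup call appears anywhere: the compiler inserts the drops at the end of the scope, in reverse order of creation.

```rust
use std::cell::RefCell;

/// Hypothetical guard that powers a peripheral up when created and down when
/// dropped. The shared log stands in for real register writes.
struct PowerGuard<'a> {
    name: &'static str,
    log: &'a RefCell<Vec<String>>,
}

impl<'a> PowerGuard<'a> {
    fn new(name: &'static str, log: &'a RefCell<Vec<String>>) -> Self {
        log.borrow_mut().push(format!("on {}", name));
        PowerGuard { name, log }
    }
}

impl<'a> Drop for PowerGuard<'a> {
    fn drop(&mut self) {
        self.log.borrow_mut().push(format!("off {}", self.name));
    }
}

fn transfer(log: &RefCell<Vec<String>>) {
    let _spi = PowerGuard::new("spi", log);
    let _dma = PowerGuard::new("dma", log);
    log.borrow_mut().push("transfer".to_string());
    // Both guards leave scope here and are dropped in reverse order of
    // creation: "dma" first, then "spi".
}

fn main() {
    let log = RefCell::new(Vec::new());
    transfer(&log);
    println!("{:?}", log.borrow());
}
```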

2. The Borrow Checker: The Gatekeeper of Concurrency

The borrow checker is the heart of Rust’s safety model, enforcing a set of rules for references (borrows) to data.

It ensures that at any given time, a piece of data can have either one mutable reference (write access) or any number of immutable references (read access), but never both simultaneously.

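Together with the Send and Sync traits, which govern what may be moved or shared between threads, this rule means that safe Rust code cannot contain data races, a significant achievement for concurrent embedded software where interrupts and multithreading are common. Higher-level race conditions, such as logic that depends on the order of events, remain possible, but the memory corruption caused by data races does not.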

By catching these concurrency bugs at compile time, Rust drastically improves both the security and the reliability of real-time embedded software.
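The following minimal sketch uses host threads (std::thread::scope, available since Rust 1.63) to stand in for concurrent contexts; on a microcontroller the same idea is usually expressed with interrupt-safe primitives rather than OS threads. The count_events function is hypothetical. The commented-out block shows the kind of unsynchronized sharing the borrow checker rejects; the accepted version routes every write through a Mutex.

```rust
use std::sync::Mutex;
use std::thread;

/// Spawns `workers` threads that each record `per_worker` events in a shared
/// counter. Each thread holds only a shared reference to the Mutex; the lock
/// hands out exclusive access one thread at a time.
fn count_events(workers: usize, per_worker: u32) -> u32 {
    let total = Mutex::new(0u32);
    thread::scope(|s| {
        for _ in 0..workers {
            s.spawn(|| {
                for _ in 0..per_worker {
                    *total.lock().unwrap() += 1;
                }
            });
        }
    }); // all scoped threads are joined here
    total.into_inner().unwrap()
}

// Sharing a plain counter mutably between two threads is rejected:
//
// let mut total = 0u32;
// thread::scope(|s| {
//     s.spawn(|| total += 1);
//     s.spawn(|| total += 1); // error[E0499]: cannot borrow `total` as mutable more than once at a time
// });

fn main() {
    println!("{}", count_events(4, 1_000));
}
```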

3. Lifetimes: Validating References in Time

Lifetimes are a mechanism the compiler uses to ensure that a reference never outlives the data it points to.

The compiler analyzes the scope of the data and the scope of the reference, and if it detects a potential dangling pointer scenario, it refuses to compile the code.

This compile-time validation eliminates dangling references in safe code, ensuring that every reference points to live data.
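A lifetime annotation is how a function tells the compiler that its result borrows from an argument. In this minimal sketch (first_field is a hypothetical parser helper), the signature ties the returned slice to the input record, which is what lets the compiler reject the commented-out function that would return a reference into a local String freed on return.

```rust
/// Returns the first comma-separated field of a sensor record.
/// The lifetime 'a ties the returned slice to `record`: the result borrows
/// from the caller's data, so it cannot be used after that data is freed.
fn first_field<'a>(record: &'a str) -> &'a str {
    // `split` always yields at least one item; unwrap_or is only a fallback.
    record.split(',').next().unwrap_or("")
}

// Rejected by the compiler: `s` is freed when the function returns, so a
// reference into it would dangle.
//
// fn dangling() -> &'static str {
//     let s = String::from("temp,21.5");
//     first_field(&s) // error: cannot return value referencing local variable `s`
// }

fn main() {
    let line = String::from("temp,21.5");
    let field = first_field(&line);
    // drop(line); // also rejected here: `line` is still borrowed by `field`
    println!("{}", field);
}
```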

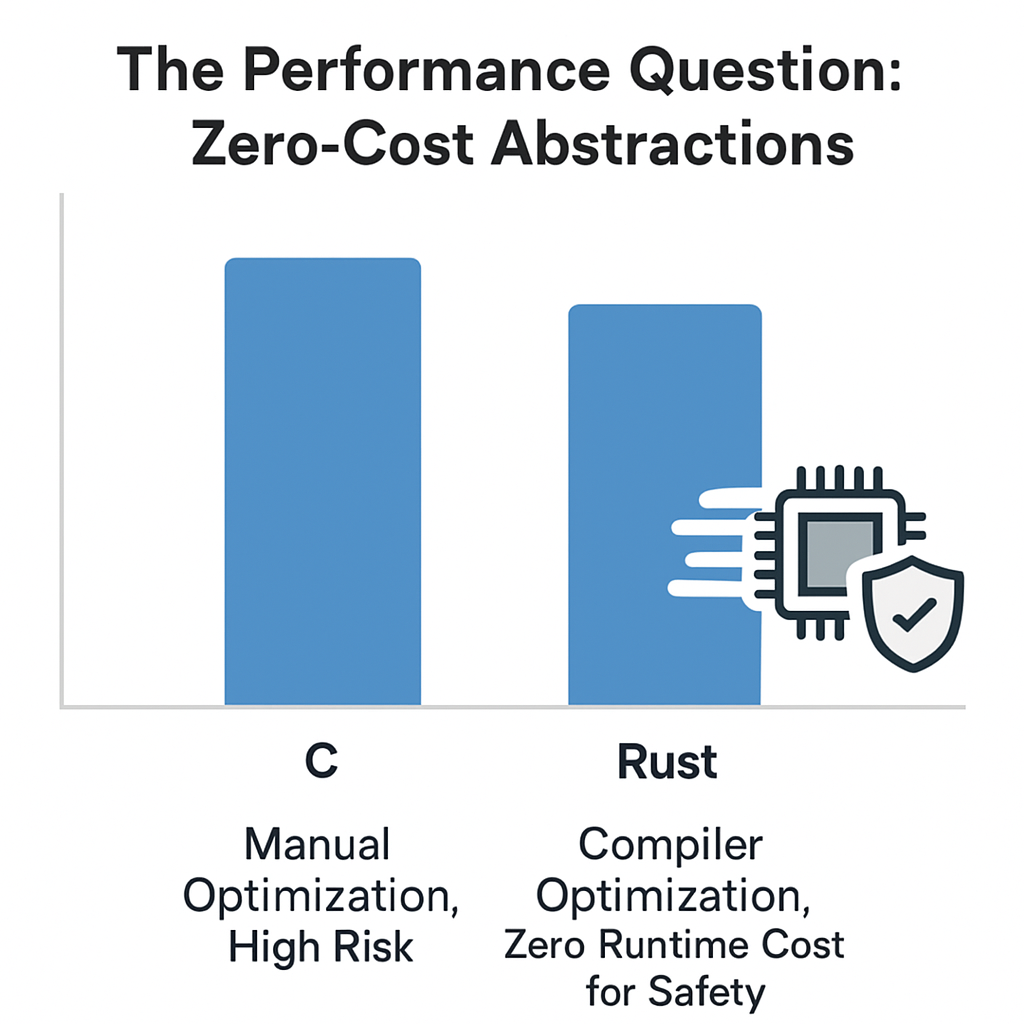

The Performance Question: Zero-Cost Abstractions

For embedded developers, the question of performance is paramount.

The fear is that the safety mechanisms of Rust must introduce runtime overhead, but this is where Rust’s design truly shines.

Most of Rust’s safety checks (ownership, borrowing, and lifetimes) are performed entirely at compile time and add no runtime cost.

There is no garbage collector and no virtual machine. The main runtime check in safe code is bounds checking on array and slice indexing; the optimizer can often remove it when an index is provably in range, and iterator-based loops avoid it entirely.

As a result, the performance of well-written Rust code is generally on par with that of C and C++, and in some cases better, because the compiler can exploit guarantees such as mutable references never aliasing when optimizing.
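The sketch below, with hypothetical helper names, illustrates where Rust’s one common runtime check sits. Indexing a slice is bounds-checked; in a loop over 0..len the optimizer can usually prove the check always passes and remove it, though whether it does so in a given build is not guaranteed. Iterators avoid indexing altogether, and get makes the check explicit by returning an Option.

```rust
/// Index-based loop. Each `samples[i]` is bounds-checked in safe Rust, but
/// because `i` comes from `0..samples.len()` the optimizer can usually prove
/// the check always passes and remove it.
fn sum_indexed(samples: &[u32]) -> u32 {
    let mut total = 0;
    for i in 0..samples.len() {
        total += samples[i];
    }
    total
}

/// Iterator-based loop: no indexing, so no bounds check to remove.
fn sum_iter(samples: &[u32]) -> u32 {
    samples.iter().sum()
}

/// Explicitly checked access: an out-of-range index yields None instead of
/// reading adjacent memory (plain `samples[i]` would panic instead).
fn sample_at(samples: &[u32], i: usize) -> Option<u32> {
    samples.get(i).copied()
}

fn main() {
    let samples = [10u32, 20, 30, 40];
    println!("{} {}", sum_indexed(&samples), sum_iter(&samples));
    println!("{:?} {:?}", sample_at(&samples, 2), sample_at(&samples, 9));
}
```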

Furthermore, Rust’s suitability for the smallest devices is supported by its no_std mode, which allows bare-metal programming without the standard library and, with size-focused build settings, can produce binaries comparable in size to C.

The Industry’s Mandate: Adoption and Momentum

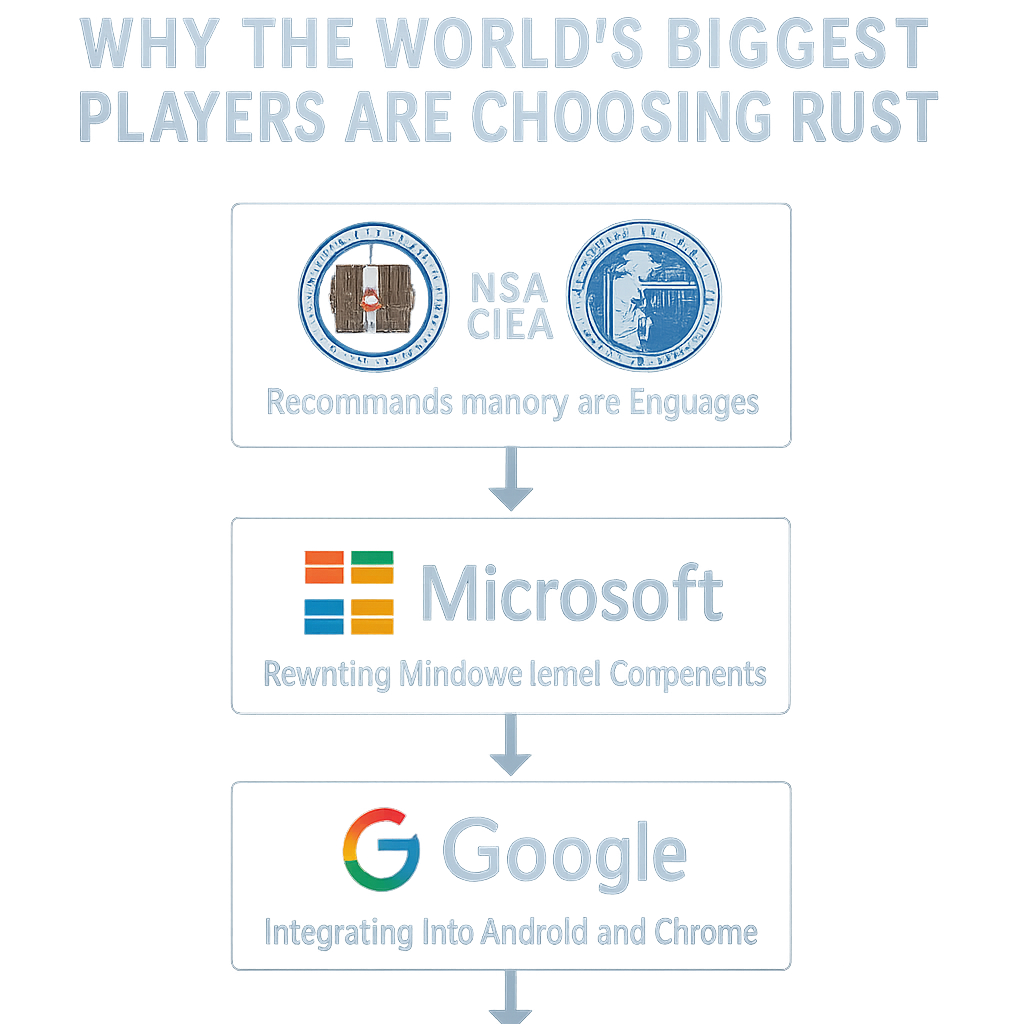

The shift to Rust is no longer a niche movement; it is a strategic mandate driven by the world’s largest technology and security organizations.

Government and Defense

The U.S. government has taken a definitive stance.

The NSA and CISA have published explicit guidance urging developers to adopt memory-safe languages to reduce the national attack surface [2] [5].

This endorsement is a powerful signal to the defense and critical infrastructure sectors, where embedded systems are the backbone of operations.

Tech Giants and Operating Systems

Major tech companies are actively integrating Rust into their most security-critical products.

Google is using Rust for components of Android and the Chrome browser, citing the elimination of memory safety bugs as the primary driver [6].

Microsoft has begun rewriting parts of Windows in Rust, including components of the kernel, with the goal of eliminating memory safety bugs in that code [7].

Perhaps most significantly for the embedded world, the Linux kernel, the operating system for countless embedded devices, has officially accepted Rust code since version 6.1, paving the way for safer drivers and kernel modules [8].

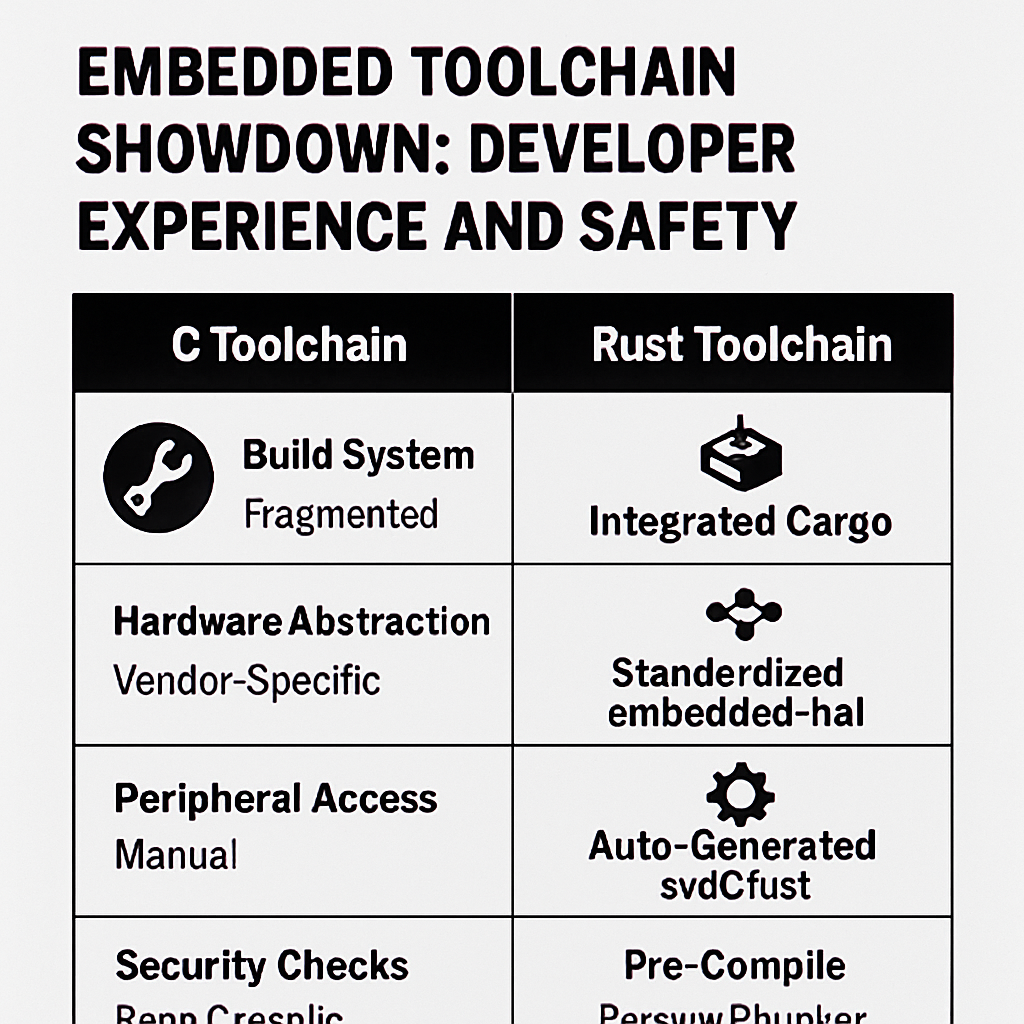

The Embedded Ecosystem

The Rust embedded ecosystem is maturing rapidly.

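The embedded-hal crate defines a common set of hardware abstraction traits (for digital pins, SPI, I2C, delays, and similar peripherals), so a device driver written against those traits can run on any microcontroller whose HAL crate implements them.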
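The pattern embedded-hal enables is a driver that is generic over a pin trait. The sketch below deliberately defines its own simplified OutputPin trait rather than importing the crate; the real embedded-hal 1.0 trait has fallible methods that return a Result, which this version omits. StatusLed and MockPin are hypothetical: the mock lets the driver run on a host, while on a board a HAL’s pin type would implement the trait instead.

```rust
/// Simplified stand-in for a HAL output-pin trait (NOT the real embedded-hal
/// API, whose methods return Result).
trait OutputPin {
    fn set_high(&mut self);
    fn set_low(&mut self);
}

/// Driver written only against the trait, so it works with any pin type
/// that implements it.
struct StatusLed<P: OutputPin> {
    pin: P,
}

impl<P: OutputPin> StatusLed<P> {
    fn new(pin: P) -> Self {
        StatusLed { pin }
    }

    fn blink(&mut self, times: usize) {
        for _ in 0..times {
            self.pin.set_high();
            self.pin.set_low();
        }
    }

    /// Gives the pin back so it can be reused for something else.
    fn release(self) -> P {
        self.pin
    }
}

/// Host-side mock that records every transition (true = high).
#[derive(Default)]
struct MockPin {
    transitions: Vec<bool>,
}

impl OutputPin for MockPin {
    fn set_high(&mut self) {
        self.transitions.push(true);
    }
    fn set_low(&mut self) {
        self.transitions.push(false);
    }
}

fn main() {
    let mut led = StatusLed::new(MockPin::default());
    led.blink(2);
    println!("{:?}", led.release().transitions);
}
```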

Real-time frameworks like RTIC (Real-Time Interrupt-driven Concurrency) offer a safe, high-level approach to concurrent programming: building on Rust’s safety guarantees and the Stack Resource Policy, RTIC ensures at compile time that shared resources are accessed without data races or deadlocks.

This tooling is rapidly closing the gap with C’s ecosystem, making Rust a viable choice for many new embedded projects, particularly on well-supported targets such as ARM Cortex-M and RISC-V microcontrollers.

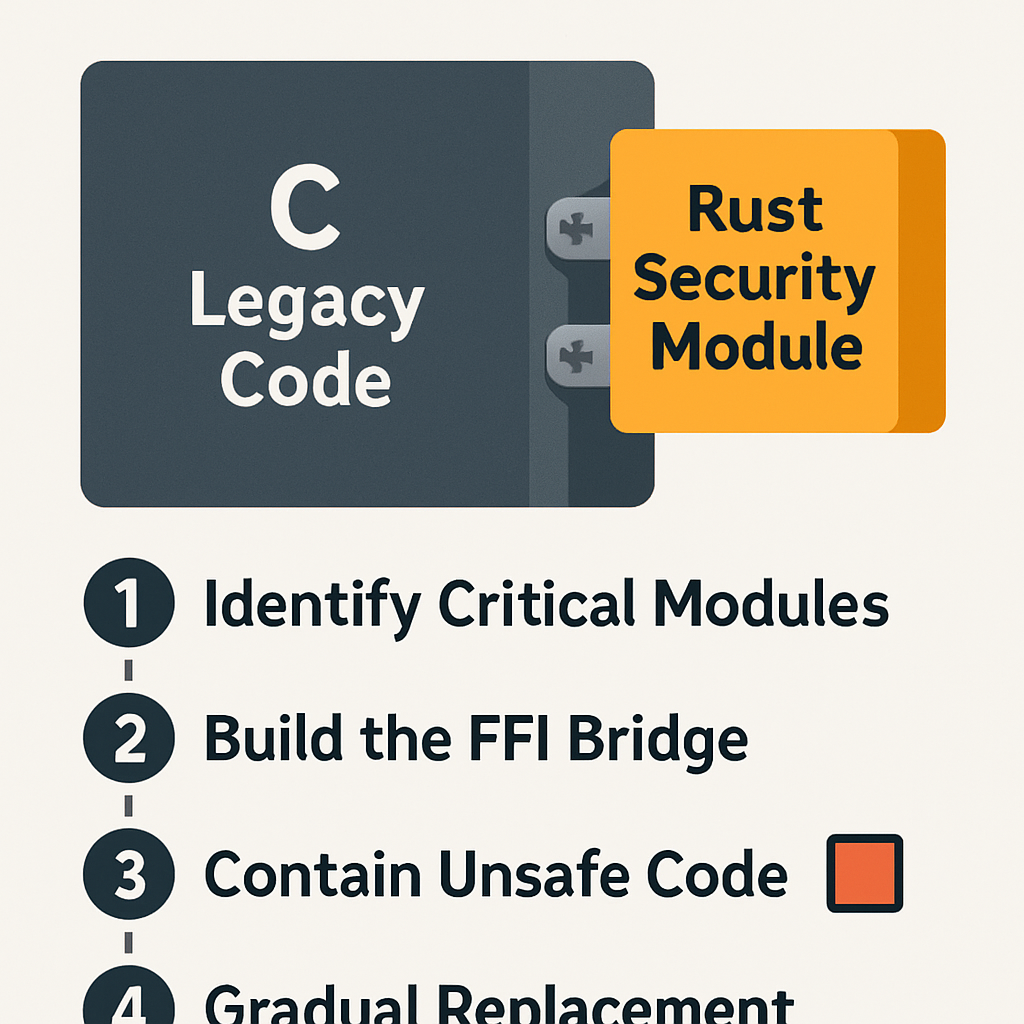

The Pragmatic Path Forward: Incremental Adoption

The reality of the embedded industry is that C codebases are massive and cannot be rewritten overnight.

The transition to Rust must be pragmatic and incremental.

Interoperability: The FFI Bridge

Rust’s Foreign Function Interface (FFI) lets Rust and C call each other directly through the C ABI, so existing C libraries and drivers can be reused without modification.

This enables a “best of both worlds” approach, where new, security-critical components—such as network stacks, cryptographic libraries, or user-facing logic—can be written in safe Rust and linked with the legacy C code.

This strategy allows organizations to immediately begin reducing their attack surface without a costly, full-scale migration.
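As a minimal sketch of this approach, a new parsing or checksum routine can be written in safe Rust and exposed to C through a thin C-ABI wrapper. The function names and the additive checksum are hypothetical placeholders. To link this from C you would additionally mark the wrapper #[no_mangle] (spelled #[unsafe(no_mangle)] in the 2024 edition), build the crate as a static library, and declare it on the C side as uint32_t packet_checksum(const uint8_t *data, size_t len);.

```rust
/// Safe Rust core logic: a simple additive checksum (placeholder algorithm
/// standing in for whatever security-critical routine is being migrated).
fn checksum(bytes: &[u8]) -> u32 {
    bytes.iter().map(|&b| u32::from(b)).sum()
}

/// Thin C-ABI wrapper that legacy C code could call.
///
/// # Safety
/// `data` must be null or point to at least `len` readable bytes that remain
/// valid for the duration of the call.
pub unsafe extern "C" fn packet_checksum(data: *const u8, len: usize) -> u32 {
    if data.is_null() {
        return 0;
    }
    // The only unsafe step: trusting the caller's pointer/length pair.
    let bytes = unsafe { std::slice::from_raw_parts(data, len) };
    checksum(bytes)
}

fn main() {
    let frame = [0x10u8, 0x20, 0x30];
    // Call through the C-ABI wrapper, as C code would.
    let sum = unsafe { packet_checksum(frame.as_ptr(), frame.len()) };
    println!("checksum = {}", sum);
}
```

All unsafety is confined to the line that turns the C pointer and length into a slice; the checksum logic itself is ordinary safe Rust.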

The Unsafe Escape Hatch

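Rust includes an unsafe keyword that unlocks a small set of operations the compiler cannot verify, such as dereferencing raw pointers, calling foreign (C) functions, and accessing mutable static variables. It does not switch off the borrow checker or the rest of the type system; it only permits these additional operations.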

This is not a flaw, but a necessary escape hatch that allows for low-level hardware interaction and FFI integration.

The key is that unsafe code is localized and clearly marked, so manual review can concentrate on those small blocks, and the invariants the surrounding module maintains for them, rather than on the entire codebase.
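A typical use of unsafe in embedded code is a memory-mapped register. In this minimal sketch, a hypothetical Register type concentrates the unsafe contract in its constructor: the caller promises the address is valid, and from then on reads and writes go through safe methods built on std::ptr::read_volatile and write_volatile. Because a host has no memory-mapped peripherals, an ordinary variable stands in for the register.

```rust
use std::ptr;

/// Hypothetical memory-mapped 32-bit register.
struct Register {
    addr: *mut u32,
}

impl Register {
    /// # Safety
    /// `addr` must be a valid, aligned, writable u32 location for as long as
    /// the Register is used, and nothing else may access it meanwhile.
    unsafe fn new(addr: *mut u32) -> Self {
        Register { addr }
    }

    fn read(&self) -> u32 {
        // SAFETY: upheld by the caller of `Register::new`.
        unsafe { ptr::read_volatile(self.addr) }
    }

    fn write(&mut self, value: u32) {
        // SAFETY: upheld by the caller of `Register::new`.
        unsafe { ptr::write_volatile(self.addr, value) }
    }

    /// Read-modify-write that sets the bits in `mask`.
    fn set_bits(&mut self, mask: u32) {
        let v = self.read();
        self.write(v | mask);
    }
}

fn main() {
    // On a host, an ordinary variable stands in for the hardware register.
    let mut fake_reg: u32 = 0;
    let mut reg = unsafe { Register::new(&mut fake_reg as *mut u32) };
    reg.set_bits(0b101);
    println!("{:#b}", reg.read());
}
```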

Conclusion: Building Security by Design

The choice between C and Rust for embedded systems is a choice between maintaining the status quo of systemic vulnerability and embracing a future of security by design.

C, for all its historical merits, is fundamentally ill-equipped to handle the security demands of the modern, connected world.

Rust offers a revolutionary solution: the performance and control of a systems language, but with memory safety guaranteed by the compiler for all safe code, not by fallible human effort.

For any organization building the next generation of embedded devices—from medical implants to industrial controllers—the strategic decision is clear.

By adopting Rust, developers are not just choosing a new language; they are choosing to eliminate the most common source of catastrophic security flaws, ensuring their products are not just fast, but fundamentally trustworthy.

The time for patching memory-safety bugs one at a time is over; the era of memory-safe embedded systems has begun.

References

[1] Microsoft Security Response Center. (2019). Why Rust for safe systems programming

[2] National Security Agency (NSA). (2022). NSA Releases Guidance on How to Protect Against Software Memory Safety Issues

[3] CISA. (2021). Heartbleed Vulnerability

[4] Forescout Research Labs. (2021). NAME:WRECK

[5] CISA. (2023). Memory Safe Languages: Reducing Vulnerabilities in Modern Software Development

[6] Google Security Blog. (2021). Rust in the Android platform

[7] Microsoft Security Response Center. (2019). Using Rust in Windows

[8] InfoQ. (2022). Linux 6.1 Officially Adds Support for Rust in the Kernel