In today’s rapidly evolving technological landscape, continuous IT training isn’t just a perk; it’s a necessity.

Organizations pour billions into upskilling their tech teams, from cybersecurity workshops to cloud computing certifications [1].

But here’s the critical question: are these investments truly paying off?

Are your employees genuinely learning, applying new skills, and driving tangible business results?

Or are you simply checking a box, hoping for the best?

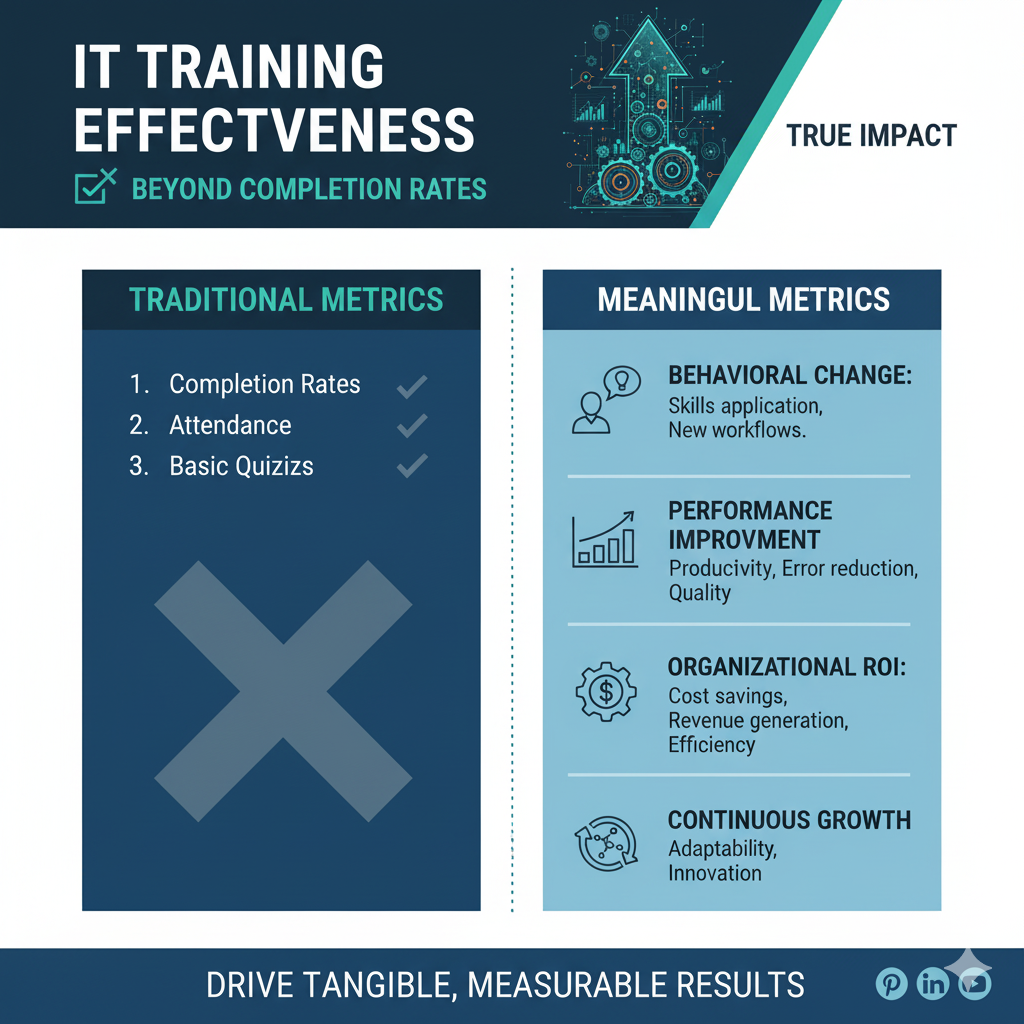

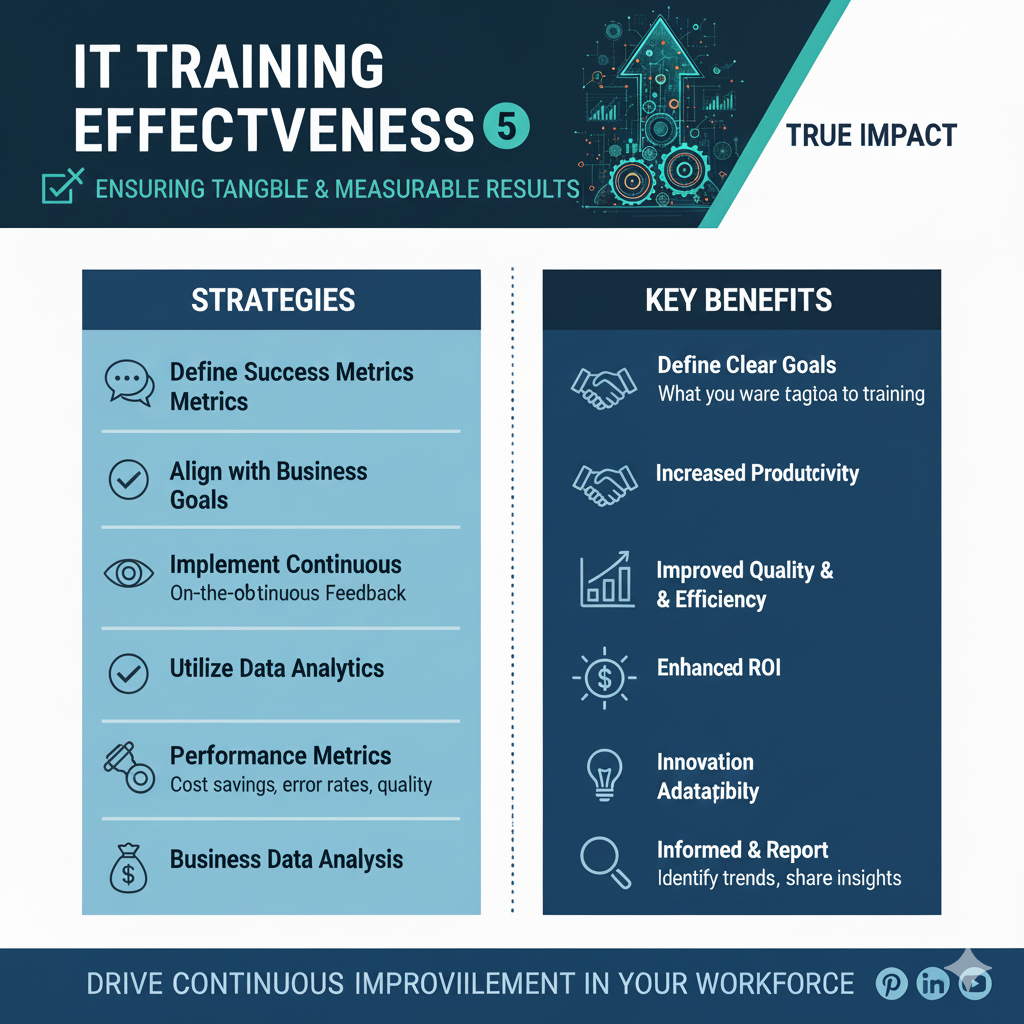

Measuring the effectiveness of IT training goes far beyond tracking attendance or completion rates.

It’s about understanding the real impact on individual performance, team productivity, and ultimately, your organization’s bottom line.

Without robust measurement, you’re navigating blind, unable to identify what works, what doesn’t, and where your valuable resources should be allocated for maximum impact.

Effective measurement moves training from expense to strategic necessity, turning it from a cost center into a demonstrable value driver.

The Challenge: Why IT Training Measurement Often Falls Short

Many organizations struggle with effectively measuring IT training.

The reasons are varied, but often include a lack of clear objectives, insufficient tools, or simply not knowing where to start.

It’s easy to get caught up in the immediate task of delivering training, overlooking the crucial step of evaluating its long-term effects.

This oversight can lead to wasted resources, frustrated employees, and a stagnant skill set that fails to keep pace with technological advancements.

One common pitfall is focusing solely on Level 1 (Reaction) metrics in Kirkpatrick’s model (covered below), such as participant satisfaction surveys.

While valuable for immediate feedback, these don’t tell you if learning actually occurred or if it translated into improved job performance.

To truly understand effectiveness, we need to go further, using evaluation models and methods that follow a training program all the way from delivery to business impact.

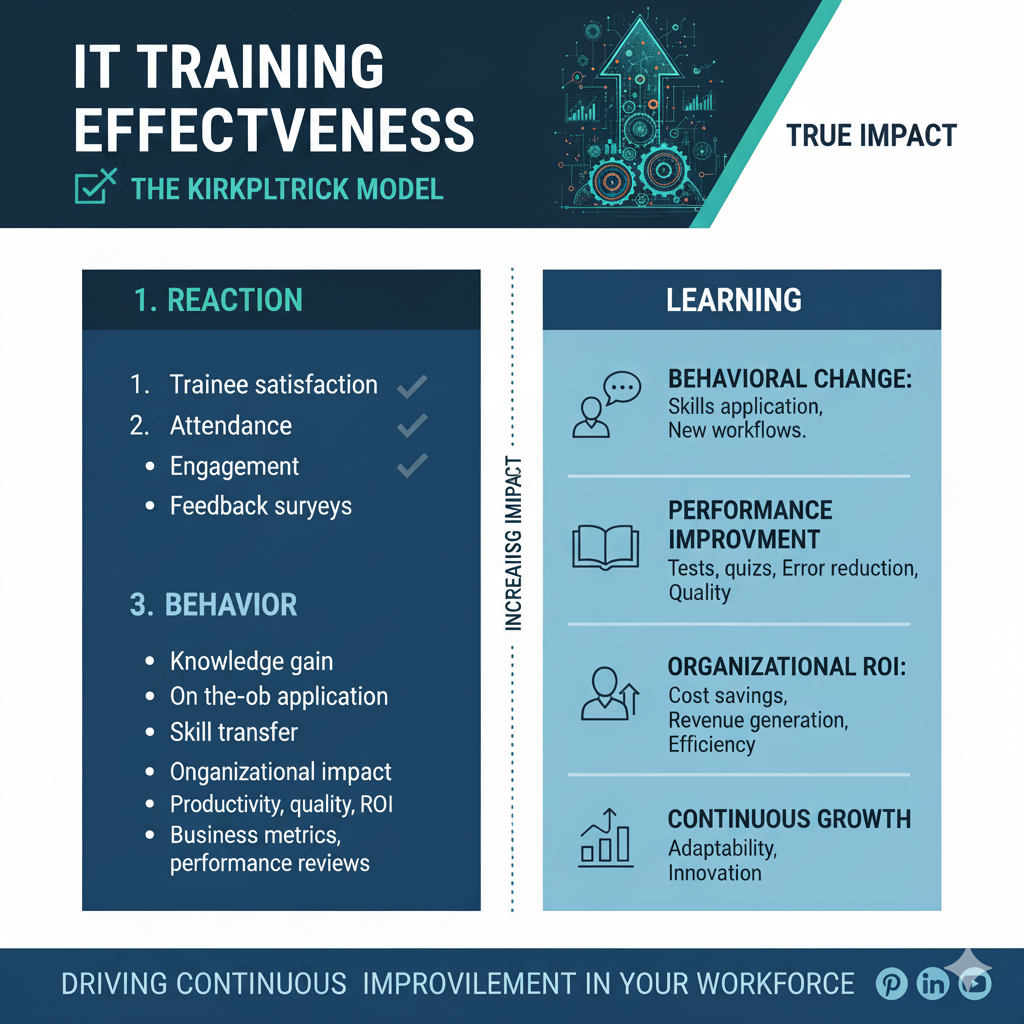

The Gold Standard: Kirkpatrick’s Four Levels of Training Evaluation

When it comes to evaluating training, the Kirkpatrick Model is widely recognized as the gold standard [2].

Developed by Donald Kirkpatrick in the 1950s and continually refined, this model provides a comprehensive framework for assessing the effectiveness of any training program, including those in IT.

It encourages a structured approach, moving from immediate reactions to long-term results.

Let’s break down each of the four levels:

Level 1: Reaction – Did They Like It?

This is the most basic level and measures how participants react to the training.

Did they find it favorable, engaging, and relevant to their jobs?

Reaction data is often dismissed as superficial, but positive reactions are crucial for motivation and engagement.

If participants don’t like the training, they’re less likely to learn from it or apply what it teaches.

How to Measure: Surveys, feedback forms, informal discussions, and post-training questionnaires are common tools.

For IT training, this might include questions about the instructor’s expertise, the clarity of technical content, the quality of lab environments, and the overall learning experience.
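As a minimal sketch of Level 1 analysis, assuming survey answers on a 1-5 Likert scale, the snippet below averages each question and reports the share of favorable answers. The question keys, the sample responses, and the cutoff of 4 for "favorable" are hypothetical choices for illustration, not part of the Kirkpatrick Model.

```python
from statistics import mean

# Hypothetical post-training survey: each response maps question -> rating (1-5 Likert scale).
responses = [
    {"instructor_expertise": 5, "content_clarity": 4, "lab_quality": 3},
    {"instructor_expertise": 4, "content_clarity": 4, "lab_quality": 2},
    {"instructor_expertise": 5, "content_clarity": 3, "lab_quality": 4},
]

FAVORABLE_CUTOFF = 4  # assumption: ratings of 4 or 5 count as favorable


def summarize_reactions(responses, cutoff=FAVORABLE_CUTOFF):
    """Return {question: (mean rating, share of favorable ratings)}, both rounded to 2 places."""
    summary = {}
    for question in responses[0]:
        ratings = [r[question] for r in responses]
        favorable = sum(1 for x in ratings if x >= cutoff) / len(ratings)
        summary[question] = (round(mean(ratings), 2), round(favorable, 2))
    return summary


for question, (avg, fav) in summarize_reactions(responses).items():
    print(f"{question}: mean {avg}, favorable {fav:.0%}")
```

Reporting the favorable share alongside the mean helps flag items, such as lab quality here, where a middling average hides a split audience.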

Level 2: Learning – Did They Learn It?

This level assesses the degree to which participants acquired the intended knowledge, skills, attitudes, confidence, and commitment.

It moves beyond mere satisfaction to determine if actual learning took place.

How to Measure: Pre- and post-training assessments, quizzes, exams, simulations, and practical exercises are effective.

For IT training, this could involve coding challenges, troubleshooting scenarios, certification exams, or demonstrating proficiency in new software or systems. Confidence and commitment, measured at this level, can also serve as early predictors of on-the-job behavior [2].
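One simple way to summarize pre- and post-assessment data is to compare each learner’s two scores. The sketch below assumes scores on a 0-100 scale and reports both the average raw gain and the normalized gain, (post - pre) / (100 - pre), a metric borrowed from education research rather than from the Kirkpatrick Model itself. Learner IDs and scores are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

MAX_SCORE = 100  # assumption: assessments are scored 0-100


@dataclass
class AssessmentResult:
    learner_id: str  # hypothetical identifier
    pre: float       # score before training
    post: float      # score after training


def raw_gain(r: AssessmentResult) -> float:
    """Points gained between the pre- and post-assessment."""
    return r.post - r.pre


def normalized_gain(r: AssessmentResult) -> Optional[float]:
    """Fraction of the possible improvement achieved; None if the pre-score was already perfect."""
    headroom = MAX_SCORE - r.pre
    if headroom == 0:
        return None
    return (r.post - r.pre) / headroom


results = [
    AssessmentResult("a01", pre=40, post=70),
    AssessmentResult("a02", pre=60, post=80),
    AssessmentResult("a03", pre=80, post=90),
]

avg_raw = sum(raw_gain(r) for r in results) / len(results)
gains = [g for g in (normalized_gain(r) for r in results) if g is not None]
avg_norm = sum(gains) / len(gains)
print(f"average raw gain: {avg_raw:.1f} points")
print(f"average normalized gain: {avg_norm:.2f}")
```

The normalized gain is useful when learners start at very different levels: in this sample the raw gains differ (30, 20, and 10 points), yet each learner closed half of the gap to a perfect score.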

Level 3: Behavior – Are They Using It?

This is where the rubber meets the road.

Level 3 measures the degree to which participants apply what they learned during the program back in their work environment. It’s not enough to know; they must do.

How to Measure: Observational assessments, peer reviews, manager feedback, 360-degree feedback, and performance evaluations are key.

In an IT context, this might involve observing how a newly trained network engineer configures a router, how a developer implements a new security protocol, or how a support specialist uses a new diagnostic tool.

It’s vital to measure behavior within a reasonable timeframe (e.g., 90 days post-training) to capture the transfer of learning [2].
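Observation checklists can be rolled up into a simple application rate for Level 3. This sketch assumes each observation records a date and the checklist items the employee performed correctly, and it counts only observations inside the 90-day window mentioned above. The router-configuration checklist and the record layout are hypothetical.

```python
from datetime import date, timedelta

WINDOW = timedelta(days=90)  # observation window after training ends
# Hypothetical checklist for a router-configuration task.
CHECKLIST = ["backs_up_config", "applies_acl", "verifies_routing_table", "documents_change"]


def application_rate(training_end, observations, window=WINDOW):
    """Share of checklist items performed, across observations made inside the window."""
    in_window = [
        items for observed_on, items in observations
        if training_end <= observed_on <= training_end + window
    ]
    if not in_window:
        return None  # nothing observed in the window yet
    performed = sum(len(set(items) & set(CHECKLIST)) for items in in_window)
    return performed / (len(CHECKLIST) * len(in_window))


training_end = date(2025, 1, 10)
observations = [
    (date(2025, 2, 1), ["backs_up_config", "applies_acl", "verifies_routing_table"]),
    (date(2025, 3, 15), ["backs_up_config", "applies_acl", "verifies_routing_table", "documents_change"]),
    (date(2025, 6, 1), ["backs_up_config"]),  # outside the 90-day window, ignored
]
print(f"application rate: {application_rate(training_end, observations):.0%}")
```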

Level 4: Results – What Impact Did It Have?

The highest level of evaluation, Level 4, measures the degree to which targeted organizational outcomes occur as a result of the training.

This is about the ultimate impact on business goals and strategic objectives.

How to Measure: This can be the most challenging but also the most rewarding level to measure.

Metrics might include increased productivity, reduced errors, improved system uptime, faster project completion, enhanced security posture, cost savings, or increased customer satisfaction.

The key is to start with the desired results and work backward, identifying leading indicators that will signal success [2].
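A common starting point for Level 4 is to compare a business metric before and after training. The sketch below uses hypothetical service desk resolution times in hours and computes the percent change in the mean. A before/after difference alone does not show that the training caused it, since other changes can move the same metric, so it works best as one leading indicator among several.

```python
from statistics import mean

# Hypothetical ticket resolution times (hours) for the same team.
before_training = [6.0, 5.5, 7.0, 6.5]
after_training = [5.0, 4.5, 5.5, 5.0]


def percent_change(before, after):
    """Percent change in the mean; a negative value means the metric decreased."""
    b, a = mean(before), mean(after)
    return (a - b) / b * 100


change = percent_change(before_training, after_training)
print(f"mean resolution time changed by {change:.1f}%")
```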

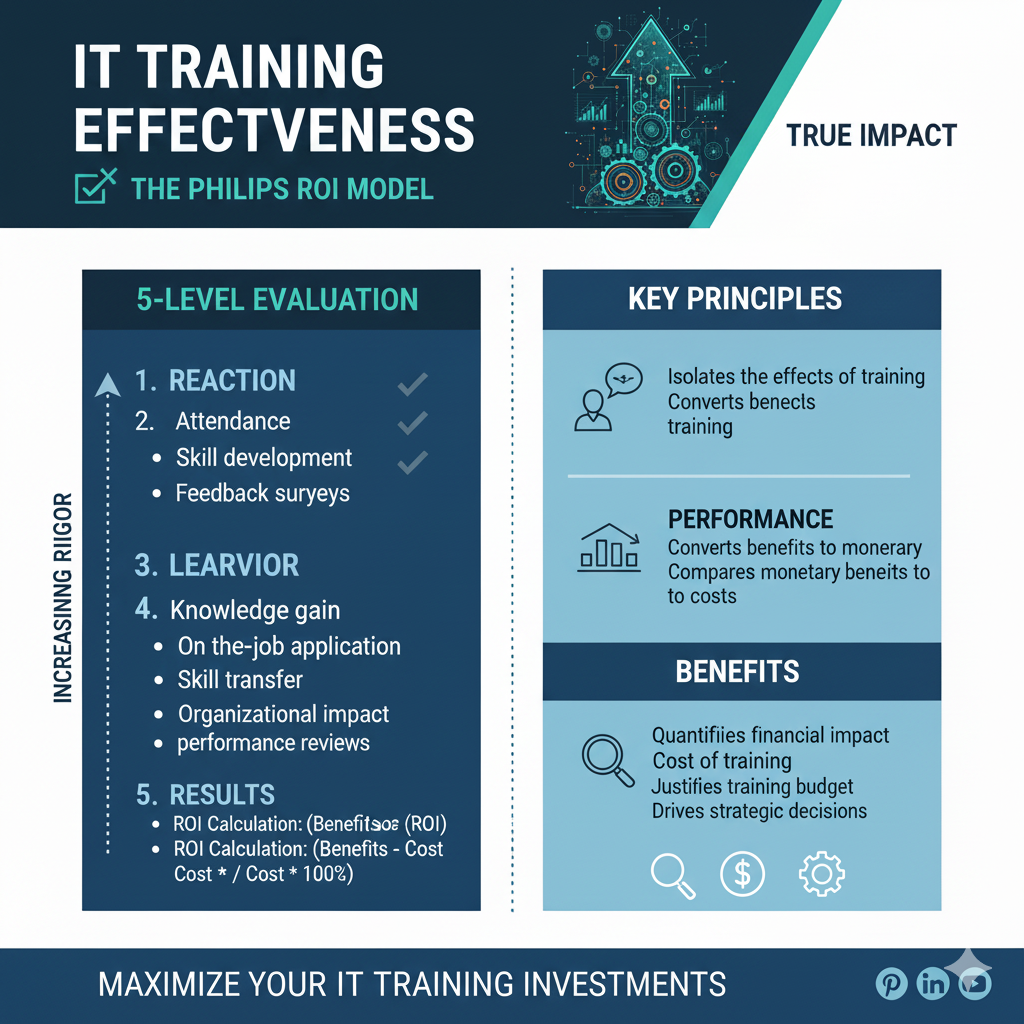

Beyond Kirkpatrick: The Phillips ROI Model

While the Kirkpatrick Model is excellent for understanding the impact of training, the Phillips ROI Model takes it a step further by quantifying the financial return on investment.

It adds a fifth level to Kirkpatrick’s framework: Return on Investment (ROI).

The basic formula for calculating training ROI is [3]:

```
ROI (%) = (Net Benefits of Training / Training Costs) × 100
```

Net Benefits of Training are calculated by subtracting the training costs from the financial gains achieved through improved productivity, reduced downtime, error reduction, or other measurable outcomes.

For example, if a cybersecurity training program reduces data breaches, the cost savings from avoiding those breaches would contribute to the net benefits.

Training Costs include all direct and indirect expenses, such as course fees, instructor salaries, materials, travel, and employee time away from work.

Calculating ROI translates the benefits of training into financial terms that resonate with business leaders, demonstrating that training is not just an expense but a strategic investment with measurable returns.
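The ROI formula translates directly into code. In this sketch, following the definitions above, net benefits are the monetized gains minus total training costs. The cost categories and all dollar figures are hypothetical, chosen only to make the arithmetic easy to check.

```python
def training_roi(monetized_gains: float, costs: dict[str, float]) -> float:
    """ROI (%) = (net benefits / training costs) x 100, where net benefits = gains - costs."""
    total_costs = sum(costs.values())
    if total_costs <= 0:
        raise ValueError("training costs must be positive")
    net_benefits = monetized_gains - total_costs
    # Multiply before dividing to avoid avoidable floating-point rounding.
    return net_benefits * 100 / total_costs


# Hypothetical cost breakdown covering direct and indirect expenses.
costs = {
    "course_fees": 20_000,
    "instructor_and_materials": 10_000,
    "travel": 5_000,
    "employee_time_away": 15_000,
}
# Hypothetical monetized gains, e.g. from reduced downtime and fewer incidents.
gains = 80_000

print(f"ROI: {training_roi(gains, costs):.0f}%")
```

Here $80,000 in gains against $50,000 in costs yields $30,000 in net benefits, or a 60% ROI; gains below total costs produce a negative ROI.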

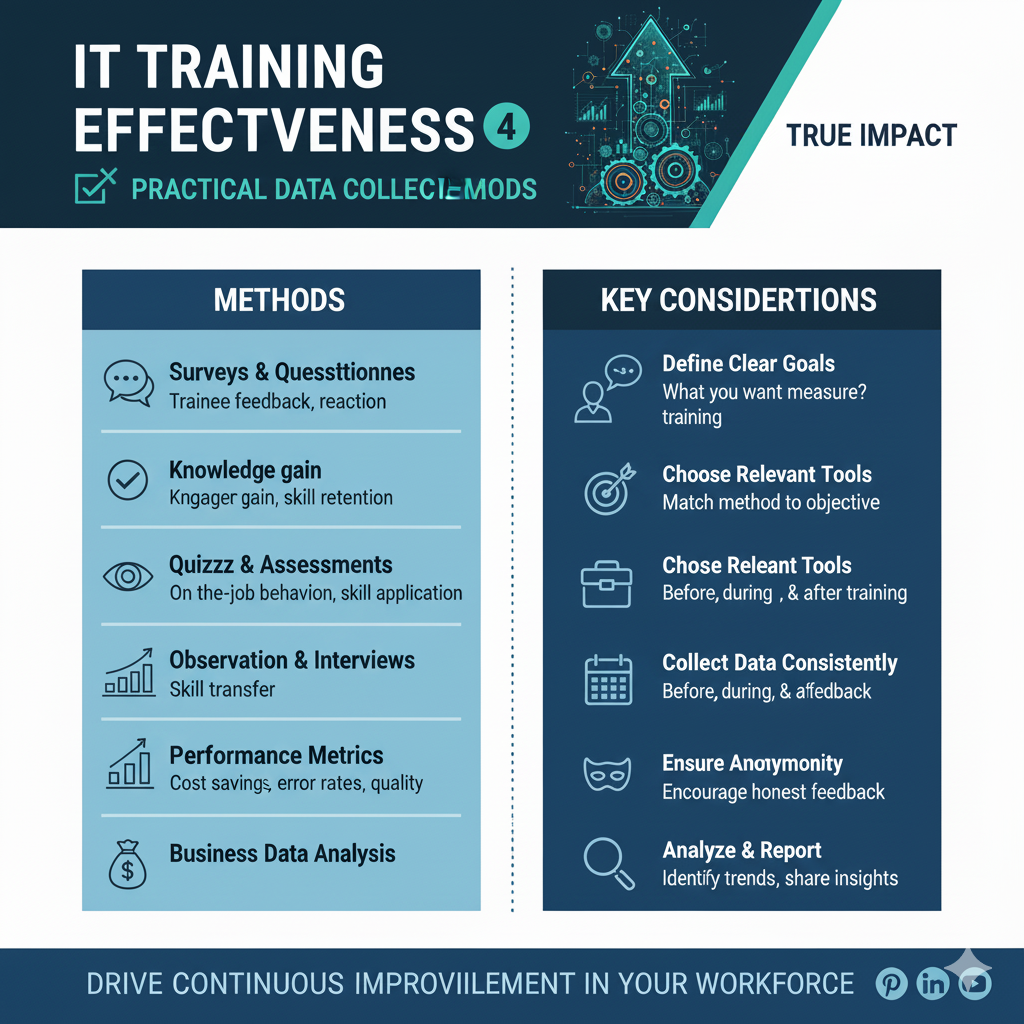

Practical Methods for Data Collection in IT Training

To effectively apply these models, you need robust data collection methods. Here are some practical approaches for IT training:

Pre- and Post-Training Assessments: Essential for Level 2 (Learning). These can be quizzes, hands-on labs, or simulated scenarios to gauge knowledge and skill acquisition before and after the training.

Surveys and Questionnaires: Primarily for Level 1 (Reaction) but can also gather insights for Level 2 (Learning) and Level 3 (Behavior) regarding perceived skill improvement and intent to apply.

Observational Assessments: Crucial for Level 3 (Behavior). Managers or peers can observe employees applying new skills in real-world IT tasks, using checklists or rubrics to ensure consistency.

Performance Evaluations: Integrate training outcomes into regular performance reviews.

Look for improvements in KPIs directly related to the training content, such as bug fix rates, system uptime, or project delivery speed.

Interviews and Focus Groups: Provide qualitative data for all levels.

One-on-one interviews with trainees and their managers can uncover nuances in learning transfer and behavioral changes.

Focus groups can explore collective perceptions and challenges.

Learning Management System (LMS) Analytics: Modern LMS platforms offer rich data on course completion, quiz scores, time spent on modules, and even engagement levels.

This data is invaluable for Level 1 and Level 2 evaluations.

Business Metrics Tracking: For Level 4 (Results) and ROI. Work with business units to identify and track relevant metrics before and after training.

This could involve IT service desk ticket resolution times, system security audit results, or development cycle times.
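Much of this data, especially from an LMS, arrives as flat exports. As a minimal sketch, assuming a CSV export with hypothetical columns `user_id`, `completed`, and `quiz_score` (real exports vary by vendor), the snippet below computes a completion rate and an average quiz score for completers.

```python
import csv
import io

# Stand-in for a file exported from an LMS; the column names are hypothetical.
EXPORT = """user_id,completed,quiz_score
u1,yes,85
u2,yes,72
u3,no,
u4,yes,91
"""


def summarize_lms_export(text):
    """Completion rate across all enrolled users and mean quiz score among completers."""
    rows = list(csv.DictReader(io.StringIO(text)))
    completers = [r for r in rows if r["completed"].strip().lower() == "yes"]
    scores = [float(r["quiz_score"]) for r in completers if r["quiz_score"]]
    return {
        "enrolled": len(rows),
        "completion_rate": len(completers) / len(rows) if rows else 0.0,
        "avg_quiz_score": sum(scores) / len(scores) if scores else None,
    }


print(summarize_lms_export(EXPORT))
```

Keeping the parsing in one small function makes it easy to swap in a real export path with `open(...)` once the vendor’s column names are known.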

Continuous Improvement: The Iterative Nature of Measurement

Measuring IT training effectiveness isn’t a one-time event; it’s an ongoing process.

The insights gained from evaluation should feed back into your training design and delivery, creating a continuous improvement loop.

If a training program isn’t yielding the desired results, the data will help you pinpoint why—whether it’s the content, the delivery method, or a lack of post-training support.
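One way to make that diagnosis systematic is to check the levels in order and stop at the first one that misses its target. The sketch below encodes this with hypothetical targets (scores normalized to 0-1) and maps each level to the cause to investigate first; that mapping is a heuristic assumed for this example, not a rule from the Kirkpatrick or Phillips models.

```python
# Hypothetical targets per level and the likely cause to investigate first
# if that level falls short. Illustrative heuristic only.
CHECKS = [
    ("reaction", 0.80, "delivery method"),
    ("learning", 0.70, "content"),
    ("behavior", 0.60, "post-training support"),
]


def first_shortfall(scores):
    """Return (level, likely cause) for the first level below target, else None.

    A level with no score is treated as 0.0, so missing data is flagged too.
    """
    for level, target, cause in CHECKS:
        if scores.get(level, 0.0) < target:
            return level, cause
    return None


print(first_shortfall({"reaction": 0.9, "learning": 0.85, "behavior": 0.4}))
```

Checking in order reflects the model’s logic: weak on-the-job behavior is only attributed to a lack of support once reaction and learning have cleared their targets.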

By consistently measuring, adapting, and refining your IT training initiatives, you can ensure that your workforce remains at the cutting edge, driving innovation, efficiency, and resilience across your organization.

It’s about moving beyond the checkbox and truly unlocking the full potential of your IT talent.

References

[1] AIHR. (n.d.). *Measuring Training Effectiveness: A Practical Guide*.

[2] Kirkpatrick Partners, LLC. (n.d.). *The Kirkpatrick Model*.

[3] Tooling U-SME. (2025, April 4). *Calculating the ROI of Training: From Expense to Necessity*.